Setting Up the Elastic Stack

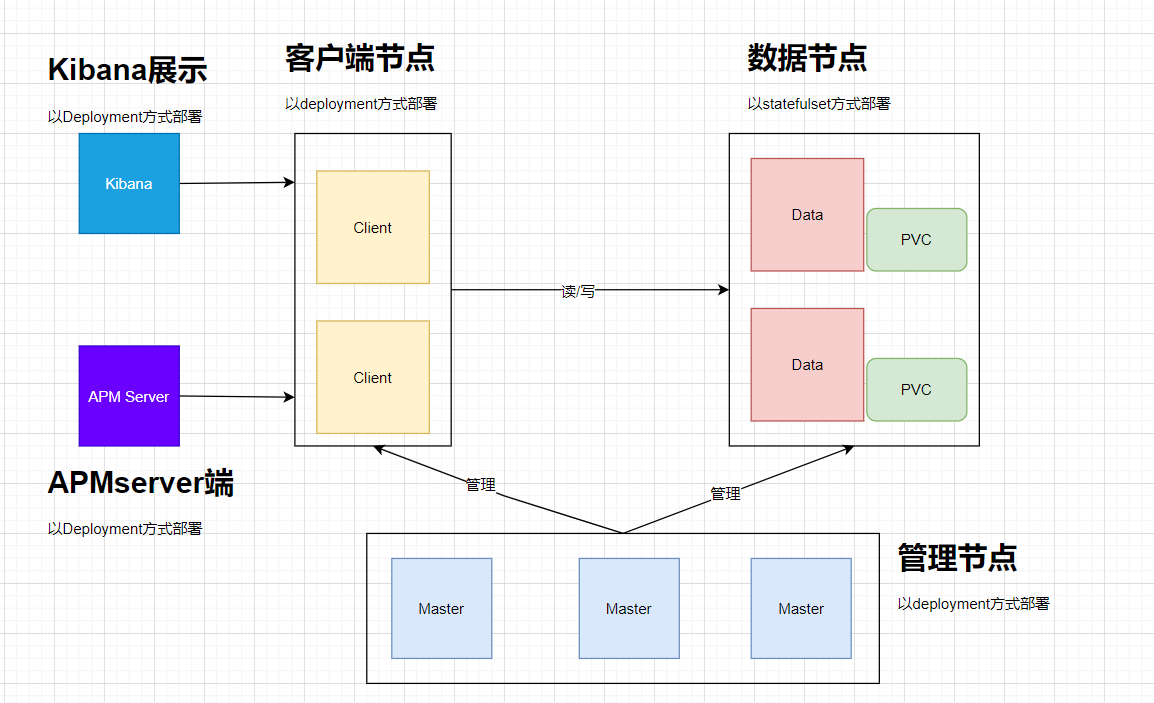

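1. Architecture

To make Elasticsearch easier to scale, the nodes are split by role into master nodes, data nodes, and client nodes. The overall architecture is as follows:

In this architecture:

- Elasticsearch data node Pods are deployed as a StatefulSet

- Elasticsearch master node Pods are deployed as a Deployment

- Elasticsearch client node Pods are deployed as a Deployment; their internal Service gives access to the data nodes for read/write requests

- Kibana and APM Server are deployed as Deployments, and their Services are reachable from outside the Kubernetes cluster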

1.1 Version information

| Software | Version |
|---|---|
| Kibana | 7.8.0 |
| Elasticsearch | 7.8.0 |
| Filebeat | 7.8.0 |
| Kubernetes | 1.17.2 |
| APM-Server | 7.8.0 |

2. Deploying Elasticsearch

First create the elastic namespace (es-ns.yaml):

apiVersion: v1
kind: Namespace
metadata:
  name: elastic

Run kubectl apply -f es-ns.yaml to create it.

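2.1 Generating certificates

With the Elasticsearch X-Pack security features enabled, transport-layer communication between nodes must be encrypted, which requires certificates.

The script is as follows (es-create-ca.sh):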

#!/bin/bash

# Elasticsearch version to use
RELEASE=7.8.0

# Run a container to generate the certificates
docker run --name elastic-charts-certs -i -w /app \
  elasticsearch:${RELEASE} \
  /bin/sh -c " \
    elasticsearch-certutil ca --out /app/elastic-stack-ca.p12 --pass '' && \
    elasticsearch-certutil cert --name security-master --dns security-master --ca /app/elastic-stack-ca.p12 --pass '' --ca-pass '' --out /app/elastic-certificates.p12" && \
# Copy the generated certificate out of the container
docker cp elastic-charts-certs:/app/elastic-certificates.p12 ./ && \
# Remove the container once the certificate has been generated
docker rm -f elastic-charts-certs && \
openssl pkcs12 -nodes -passin pass:'' -in elastic-certificates.p12 -out elastic-certificate.pem

Generate the certificates:

chmod +x es-create-ca.sh && ./es-create-ca.sh

This produces two files in the current directory:

# ll
-rw-r--r-- 1 root root 4650 Oct 14 16:54 elastic-certificate.pem
-rw------- 1 root root 3513 Oct 14 16:54 elastic-certificates.p12

Store the certificates in the cluster as Secrets:

kubectl create secret -n elastic generic elastic-certificates --from-file=elastic-certificates.p12
kubectl create secret -n elastic generic elastic-certificate-pem --from-file=elastic-certificate.pem

2.1 Deploying the ES master node

The manifest is as follows (es-master.yaml):

---
apiVersion: v1
kind: ConfigMap
metadata:
  namespace: elastic
  name: elasticsearch-master-config
  labels:
    app: elasticsearch
    role: master
data:
  elasticsearch.yml: |-
    cluster.name: ${CLUSTER_NAME}
    node.name: ${NODE_NAME}
    discovery.seed_hosts: ${NODE_LIST}
    cluster.initial_master_nodes: ${MASTER_NODES}
    network.host: 0.0.0.0
    node:
      master: true
      data: false
      ingest: false
    xpack.security.enabled: true
    xpack.monitoring.collection.enabled: true
    xpack.security.transport.ssl.enabled: true
    xpack.security.transport.ssl.verification_mode: certificate
    xpack.security.transport.ssl.keystore.path: /usr/share/elasticsearch/config/certs/elastic-certificates.p12
    xpack.security.transport.ssl.truststore.path: /usr/share/elasticsearch/config/certs/elastic-certificates.p12
    xpack.ml.enabled: true
    xpack.license.self_generated.type: basic
    xpack.monitoring.exporters.my_local:
      type: local
      use_ingest: false
---
apiVersion: v1
kind: Service
metadata:
  namespace: elastic
  name: elasticsearch-master
  labels:
    app: elasticsearch
    role: master
spec:
  ports:
    - port: 9300
      name: transport
  selector:
    app: elasticsearch
    role: master
---
apiVersion: apps/v1
kind: Deployment
metadata:
  namespace: elastic
  name: elasticsearch-master
  labels:
    app: elasticsearch
    role: master
spec:
  replicas: 1
  selector:
    matchLabels:
      app: elasticsearch
      role: master
  template:
    metadata:
      labels:
        app: elasticsearch
        role: master
    spec:
      initContainers:
        - name: init-sysctl
          image: busybox:1.27.2
          command:
            - sysctl
            - -w
            - vm.max_map_count=262144
          securityContext:
            privileged: true
      containers:
        - name: elasticsearch-master
          image: docker.elastic.co/elasticsearch/elasticsearch:7.8.0
          env:
            - name: CLUSTER_NAME
              value: elasticsearch
            - name: NODE_NAME
              value: elasticsearch-master
            - name: NODE_LIST
              value: elasticsearch-master,elasticsearch-data,elasticsearch-client
            - name: MASTER_NODES
              value: elasticsearch-master
            - name: "ES_JAVA_OPTS"
              value: "-Xms512m -Xmx512m"
          ports:
            - containerPort: 9300
              name: transport
          volumeMounts:
            - name: config
              mountPath: /usr/share/elasticsearch/config/elasticsearch.yml
              readOnly: true
              subPath: elasticsearch.yml
            - name: storage
              mountPath: /usr/share/elasticsearch/data
            - name: localtime
              mountPath: /etc/localtime
            - name: keystore
              mountPath: /usr/share/elasticsearch/config/certs/elastic-certificates.p12
              readOnly: true
              subPath: elastic-certificates.p12
      volumes:
        - name: config
          configMap:
            name: elasticsearch-master-config
        - name: "storage"
          emptyDir:
            medium: ""
        - name: localtime
          hostPath:
            path: /etc/localtime
        - name: keystore
          secret:
            secretName: elastic-certificates
            defaultMode: 044

Then run kubectl apply -f es-master.yaml to create the resources. The deployment has succeeded once the pod reaches the Running state.
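The "all pods are Running" check used throughout this guide can also be scripted instead of read by eye. The following is only a minimal sketch: it assumes the default `kubectl get pod` column layout (NAME, READY, STATUS, ...), and the function name `all_pods_running` is hypothetical.

```shell
# Reads `kubectl get pod` output on stdin. Succeeds only if at least one pod
# row is present and every row reports STATUS "Running" with READY n/n.
all_pods_running() {
  awk 'NR > 1 && NF >= 3 {
         split($2, ready, "/")            # READY column, e.g. "1/1"
         if ($3 != "Running" || ready[1] != ready[2]) bad = 1
         seen = 1
       }
       END { if (bad || !seen) exit 1 }'
}

# Usage (not executed here):
#   kubectl get pod -n elastic | all_pods_running && echo "all pods ready"
```

The header row is skipped via `NR > 1`, so the function returns a failure when the listing contains no pods at all.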

kubectl get pod -n elastic
NAME                                    READY   STATUS    RESTARTS   AGE
elasticsearch-master-77d5d6c9db-xt5kq   1/1     Running   0          67s

2.2 Deploying the ES data node

The manifest is as follows (es-data.yaml):

---
apiVersion: v1
kind: ConfigMap
metadata:
  namespace: elastic
  name: elasticsearch-data-config
  labels:
    app: elasticsearch
    role: data
data:
  elasticsearch.yml: |-
    cluster.name: ${CLUSTER_NAME}
    node.name: ${NODE_NAME}
    discovery.seed_hosts: ${NODE_LIST}
    cluster.initial_master_nodes: ${MASTER_NODES}
    network.host: 0.0.0.0
    node:
      master: false
      data: true
      ingest: false
    xpack.security.enabled: true
    xpack.monitoring.collection.enabled: true
    xpack.security.transport.ssl.enabled: true
    xpack.security.transport.ssl.verification_mode: certificate
    xpack.security.transport.ssl.keystore.path: /usr/share/elasticsearch/config/certs/elastic-certificates.p12
    xpack.security.transport.ssl.truststore.path: /usr/share/elasticsearch/config/certs/elastic-certificates.p12
    xpack.ml.enabled: true
    xpack.license.self_generated.type: basic
    xpack.monitoring.exporters.my_local:
      type: local
      use_ingest: false
---
apiVersion: v1
kind: Service
metadata:
  namespace: elastic
  name: elasticsearch-data
  labels:
    app: elasticsearch
    role: data
spec:
  ports:
    - port: 9300
      name: transport
  selector:
    app: elasticsearch
    role: data
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  namespace: elastic
  name: elasticsearch-data
  labels:
    app: elasticsearch
    role: data
spec:
  serviceName: "elasticsearch-data"
  selector:
    matchLabels:
      app: elasticsearch
      role: data
  template:
    metadata:
      labels:
        app: elasticsearch
        role: data
    spec:
      initContainers:
        - name: init-sysctl
          image: busybox:1.27.2
          command:
            - sysctl
            - -w
            - vm.max_map_count=262144
          securityContext:
            privileged: true
      containers:
        - name: elasticsearch-data
          image: docker.elastic.co/elasticsearch/elasticsearch:7.8.0
          env:
            - name: CLUSTER_NAME
              value: elasticsearch
            - name: NODE_NAME
              value: elasticsearch-data
            - name: NODE_LIST
              value: elasticsearch-master,elasticsearch-data,elasticsearch-client
            - name: MASTER_NODES
              value: elasticsearch-master
            - name: "ES_JAVA_OPTS"
              value: "-Xms1024m -Xmx1024m"
          ports:
            - containerPort: 9300
              name: transport
          volumeMounts:
            - name: config
              mountPath: /usr/share/elasticsearch/config/elasticsearch.yml
              readOnly: true
              subPath: elasticsearch.yml
            - name: elasticsearch-data-persistent-storage
              mountPath: /usr/share/elasticsearch/data
            - name: keystore
              mountPath: /usr/share/elasticsearch/config/certs/elastic-certificates.p12
              readOnly: true
              subPath: elastic-certificates.p12
      volumes:
        - name: config
          configMap:
            name: elasticsearch-data-config
        - name: keystore
          secret:
            secretName: elastic-certificates
            defaultMode: 044
  volumeClaimTemplates:
    - metadata:
        name: elasticsearch-data-persistent-storage
      spec:
        accessModes: ["ReadWriteOnce"]
        storageClassName: managed-nfs-storage
        resources:
          requests:
            storage: 20Gi
---

Run kubectl apply -f es-data.yaml to create the resources. The deployment has succeeded once the pod reaches the Running state.

kubectl get pod -n elastic
NAME                                    READY   STATUS    RESTARTS   AGE
elasticsearch-data-0                    1/1     Running   0          4s
elasticsearch-master-77d5d6c9db-gklgd   1/1     Running   0          2m35s
elasticsearch-master-77d5d6c9db-gvhcb   1/1     Running   0          2m35s
elasticsearch-master-77d5d6c9db-pflz6   1/1     Running   0          2m35s

2.3 Deploying the ES client node

The manifest is as follows (es-client.yaml):

---
apiVersion: v1
kind: ConfigMap
metadata:
  namespace: elastic
  name: elasticsearch-client-config
  labels:
    app: elasticsearch
    role: client
data:
  elasticsearch.yml: |-
    cluster.name: ${CLUSTER_NAME}
    node.name: ${NODE_NAME}
    discovery.seed_hosts: ${NODE_LIST}
    cluster.initial_master_nodes: ${MASTER_NODES}
    network.host: 0.0.0.0
    node:
      master: false
      data: false
      ingest: true
    xpack.security.enabled: true
    xpack.monitoring.collection.enabled: true
    xpack.security.transport.ssl.enabled: true
    xpack.security.transport.ssl.verification_mode: certificate
    xpack.security.transport.ssl.keystore.path: /usr/share/elasticsearch/config/certs/elastic-certificates.p12
    xpack.security.transport.ssl.truststore.path: /usr/share/elasticsearch/config/certs/elastic-certificates.p12
    xpack.ml.enabled: true
    xpack.license.self_generated.type: basic
    xpack.monitoring.exporters.my_local:
      type: local
      use_ingest: false
---
apiVersion: v1
kind: Service
metadata:
  namespace: elastic
  name: elasticsearch-client
  labels:
    app: elasticsearch
    role: client
spec:
  ports:
    - port: 9200
      name: client
    - port: 9300
      name: transport
  selector:
    app: elasticsearch
    role: client
---
apiVersion: apps/v1
kind: Deployment
metadata:
  namespace: elastic
  name: elasticsearch-client
  labels:
    app: elasticsearch
    role: client
spec:
  selector:
    matchLabels:
      app: elasticsearch
      role: client
  template:
    metadata:
      labels:
        app: elasticsearch
        role: client
    spec:
      initContainers:
        - name: init-sysctl
          image: busybox:1.27.2
          command:
            - sysctl
            - -w
            - vm.max_map_count=262144
          securityContext:
            privileged: true
      containers:
        - name: elasticsearch-client
          image: docker.elastic.co/elasticsearch/elasticsearch:7.8.0
          env:
            - name: CLUSTER_NAME
              value: elasticsearch
            - name: NODE_NAME
              value: elasticsearch-client
            - name: NODE_LIST
              value: elasticsearch-master,elasticsearch-data,elasticsearch-client
            - name: MASTER_NODES
              value: elasticsearch-master
            - name: "ES_JAVA_OPTS"
              value: "-Xms256m -Xmx256m"
          ports:
            - containerPort: 9200
              name: client
            - containerPort: 9300
              name: transport
          volumeMounts:
            - name: config
              mountPath: /usr/share/elasticsearch/config/elasticsearch.yml
              readOnly: true
              subPath: elasticsearch.yml
            - name: storage
              mountPath: /usr/share/elasticsearch/data
            - name: keystore
              mountPath: /usr/share/elasticsearch/config/certs/elastic-certificates.p12
              readOnly: true
              subPath: elastic-certificates.p12
      volumes:
        - name: config
          configMap:
            name: elasticsearch-client-config
        - name: "storage"
          emptyDir:
            medium: ""
        - name: keystore
          secret:
            secretName: elastic-certificates
            defaultMode: 044

Run kubectl apply -f es-client.yaml to create the resources. The deployment has succeeded once the pod reaches the Running state.

kubectl get pod -n elastic
NAME                                    READY   STATUS    RESTARTS   AGE
elasticsearch-client-f79cf4f7b-pbz9d    1/1     Running   0          5s
elasticsearch-data-0                    1/1     Running   0          3m11s
elasticsearch-master-77d5d6c9db-gklgd   1/1     Running   0          5m42s
elasticsearch-master-77d5d6c9db-gvhcb   1/1     Running   0          5m42s
elasticsearch-master-77d5d6c9db-pflz6   1/1     Running   0          5m42s

2.4 Generating passwords

Because the X-Pack security module is enabled to protect the cluster, we need initial passwords. Running bin/elasticsearch-setup-passwords inside the client node container, as shown below, generates passwords for the built-in users. The label selector matches the app=elasticsearch and role=client labels set in es-client.yaml:

kubectl exec $(kubectl get pods -n elastic -l app=elasticsearch,role=client --field-selector=status.phase=Running -o jsonpath='{.items[0].metadata.name}') -n elastic -- bin/elasticsearch-setup-passwords auto -b

Output:

Changed password for user apm_system
PASSWORD apm_system = hvlXFW1lIn04Us99Mgew

Changed password for user kibana_system
PASSWORD kibana_system = 7Zwfbd250QfV6VcqfY9z

Changed password for user kibana
PASSWORD kibana = 7Zwfbd250QfV6VcqfY9z

Changed password for user logstash_system
PASSWORD logstash_system = tuUsRXDYMOtBEbpTIJgX

Changed password for user beats_system
PASSWORD beats_system = 36HrrpwqOdd7VFAzh8EW

Changed password for user remote_monitoring_user
PASSWORD remote_monitoring_user = bD1vsqJJZoLxGgVciXYR

Changed password for user elastic
PASSWORD elastic = BA72sAEEY1Bphgruxlcw

Note that the password of the elastic user also needs to be stored in a Kubernetes Secret:

kubectl create secret generic elasticsearch-pw-elastic \
  -n elastic \
  --from-literal password=BA72sAEEY1Bphgruxlcw
secret/elasticsearch-pw-elastic created

2.5 Verifying the cluster state

Once the deployment is complete, verify that the cluster is healthy with the following command:

kubectl exec -it -n elastic elasticsearch-client-f79cf4f7b-pbz9d -- curl -u elastic:BA72sAEEY1Bphgruxlcw 'http://elasticsearch-client.elastic:9200/_cluster/health?pretty'

{
  "cluster_name": "elasticsearch",
  "status": "green",
  "timed_out": false,
  "number_of_nodes": 3,
  "number_of_data_nodes": 1,
  "active_primary_shards": 2,
  "active_shards": 2,
  "relocating_shards": 0,
  "initializing_shards": 0,
  "unassigned_shards": 0,
  "delayed_unassigned_shards": 0,
  "number_of_pending_tasks": 0,
  "number_of_in_flight_fetch": 0,
  "task_max_waiting_in_queue_millis": 0,
  "active_shards_percent_as_number": 100.0
}

The cluster status is green, which means the cluster is healthy.
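For use in a script, the same health check can be reduced to an exit code. This is a minimal sketch rather than a robust JSON parser: it assumes the response contains a single lowercase "status" field, as in the output above, and the function names `health_status` and `is_green` are hypothetical.

```shell
# Print the value of the "status" field from a _cluster/health JSON body on stdin.
health_status() {
  sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([a-z]*\)".*/\1/p' | head -n 1
}

# Succeed only when the reported status is green.
is_green() {
  [ "$(health_status)" = "green" ]
}

# Usage (not executed here; pod name and password are placeholders):
#   kubectl exec -n elastic <client-pod> -- \
#     curl -s -u elastic:<password> 'http://elasticsearch-client.elastic:9200/_cluster/health' | is_green
```

Where `jq` is available, it would be a sturdier way to read the field. `sed` is used here only to keep the sketch free of extra dependencies.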

3. Deploying Kibana

Kibana is a simple tool for visualizing Elasticsearch data. Its YAML manifest is as follows (kibana.yaml):

---
apiVersion: v1
kind: ConfigMap
metadata:
  namespace: elastic
  name: kibana-config
  labels:
    app: kibana
data:
  kibana.yml: |-
    server.host: 0.0.0.0
    elasticsearch:
      hosts: ${ELASTICSEARCH_HOSTS}
      username: ${ELASTICSEARCH_USER}
      password: ${ELASTICSEARCH_PASSWORD}
---
apiVersion: v1
kind: Service
metadata:
  namespace: elastic
  name: kibana
  labels:
    app: kibana
spec:
  ports:
    - port: 5601
      name: webinterface
  selector:
    app: kibana
---
apiVersion: networking.k8s.io/v1beta1
kind: Ingress
metadata:
  annotations:
    prometheus.io/http-probe: "true"
    prometheus.io/scrape: "true"
  name: kibana
  namespace: elastic
spec:
  rules:
    - host: kibana.coolops.cn
      http:
        paths:
          - backend:
              serviceName: kibana
              servicePort: 5601
            path: /
---
apiVersion: apps/v1
kind: Deployment
metadata:
  namespace: elastic
  name: kibana
  labels:
    app: kibana
spec:
  selector:
    matchLabels:
      app: kibana
  template:
    metadata:
      labels:
        app: kibana
    spec:
      containers:
        - name: kibana
          image: docker.elastic.co/kibana/kibana:7.8.0
          ports:
            - containerPort: 5601
              name: webinterface
          env:
            - name: ELASTICSEARCH_HOSTS
              value: "http://elasticsearch-client.elastic.svc.cluster.local:9200"
            - name: ELASTICSEARCH_USER
              value: "elastic"
            - name: ELASTICSEARCH_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: elasticsearch-pw-elastic
                  key: password
          volumeMounts:
            - name: config
              mountPath: /usr/share/kibana/config/kibana.yml
              readOnly: true
              subPath: kibana.yml
      volumes:
        - name: config
          configMap:
            name: kibana-config
---

Then run kubectl apply -f kibana.yaml to create Kibana, and check that the pod reaches the Running state.

kubectl get pod -n elastic

NAME READY STATUS RESTARTS AGE

elasticsearch-client-f79cf4f7b-pbz9d 1/1 Running 0 30m

elasticsearch-data-0 1/1 Running 0 33m

elasticsearch-master-77d5d6c9db-gklgd 1/1 Running 0 36m

elasticsearch-master-77d5d6c9db-gvhcb 1/1 Running 0 36m

elasticsearch-master-77d5d6c9db-pflz6 1/1 Running 0 36m

kibana-6b9947fccb-4vp29 1/1 Running 0 3m51s如下图所示,使用上面我们创建的 Secret 对象的 elastic 用户和生成的密码即可登录:

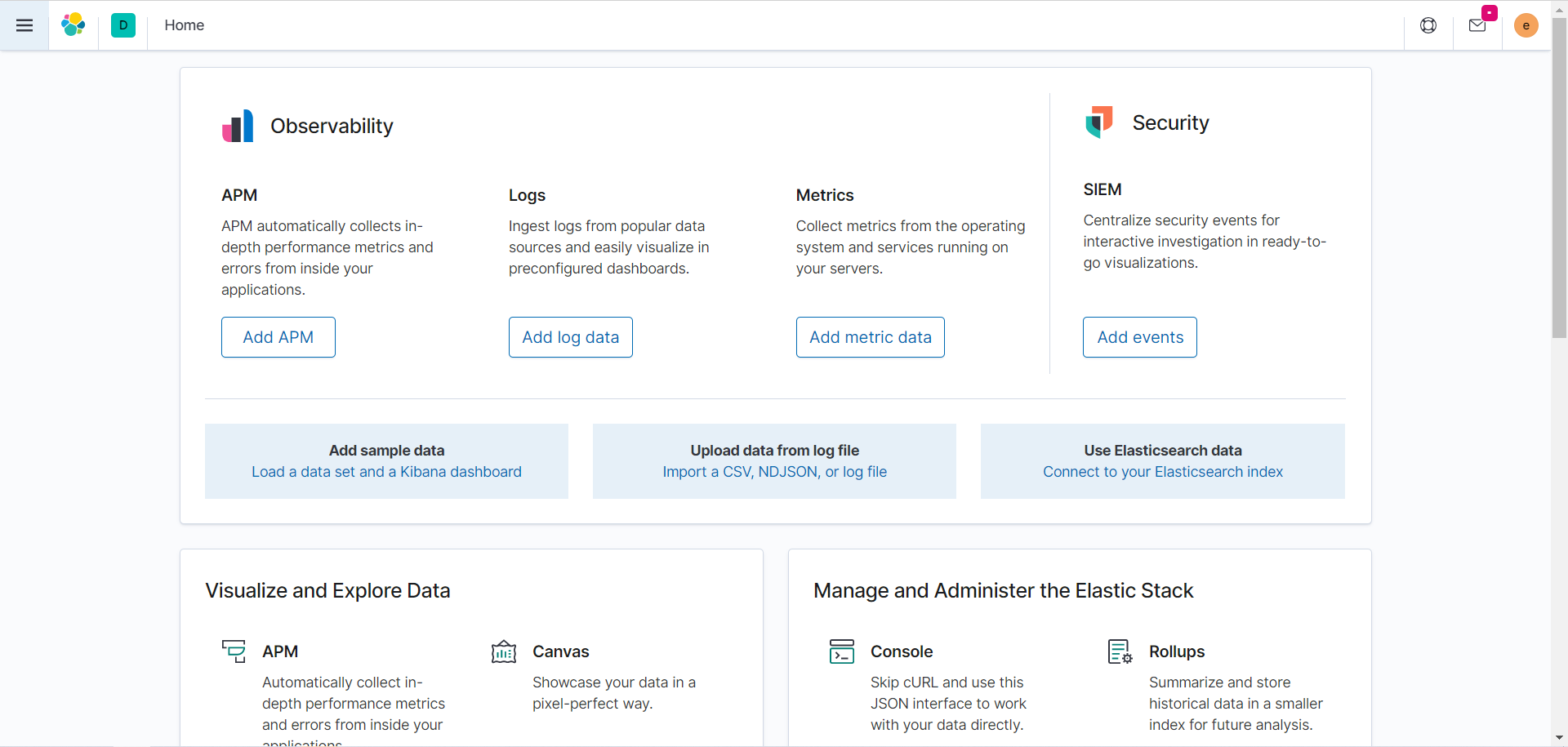

登录成功后即可进入以下界面。

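4. Deploying Elastic APM

Elastic APM is the application performance monitoring tool of the Elastic Stack. It lets us monitor application performance in real time by collecting incoming requests, database queries, cache calls, and so on, which makes it easier to locate performance problems quickly.

Elastic APM is OpenTracing compatible, so a large number of existing libraries can be used to trace application performance. For example, we can follow a request through a distributed environment (a microservice architecture) and easily find potential performance bottlenecks.

Elastic APM works through a component called APM Server, which collects trace data from the agents running alongside the applications and ships it to Elasticsearch.

4.1 Installing APM Server

The manifest is as follows (apm-server.yaml):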

---
apiVersion: v1
kind: ConfigMap
metadata:
  namespace: elastic
  name: apm-server-config
  labels:
    app: apm-server
data:
  apm-server.yml: |-
    apm-server:
      host: "0.0.0.0:8200"
    output.elasticsearch:
      hosts: ['${ELASTICSEARCH_HOST:elasticsearch}:${ELASTICSEARCH_PORT:9200}']
      username: ${ELASTICSEARCH_USERNAME}
      password: ${ELASTICSEARCH_PASSWORD}
    setup.kibana:
      host: '${KIBANA_HOST:kibana}:${KIBANA_PORT:5601}'
---
apiVersion: v1
kind: Service
metadata:
  namespace: elastic
  name: apm-server
  labels:
    app: apm-server
spec:
  ports:
    - port: 8200
      name: apm-server
  selector:
    app: apm-server
---
apiVersion: apps/v1
kind: Deployment
metadata:
  namespace: elastic
  name: apm-server
  labels:
    app: apm-server
spec:
  replicas: 1
  selector:
    matchLabels:
      app: apm-server
  template:
    metadata:
      labels:
        app: apm-server
    spec:
      containers:
        - name: apm-server
          image: docker.elastic.co/apm/apm-server:7.8.0
          env:
            - name: ELASTICSEARCH_HOST
              value: elasticsearch-client.elastic.svc.cluster.local
            - name: ELASTICSEARCH_PORT
              value: "9200"
            - name: ELASTICSEARCH_USERNAME
              value: elastic
            - name: ELASTICSEARCH_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: elasticsearch-pw-elastic
                  key: password
            - name: KIBANA_HOST
              value: kibana.elastic.svc.cluster.local
            - name: KIBANA_PORT
              value: "5601"
          ports:
            - containerPort: 8200
              name: apm-server
          volumeMounts:
            - name: config
              mountPath: /usr/share/apm-server/apm-server.yml
              readOnly: true
              subPath: apm-server.yml
      volumes:
        - name: config
          configMap:
            name: apm-server-config

Then run kubectl apply -f apm-server.yaml and check the pod status. Once the pod is Running, APM Server has started successfully.

kubectl get pod -n elastic
NAME                                    READY   STATUS    RESTARTS   AGE
apm-server-667bfc5cff-7vqsd             1/1     Running   0          91s
elasticsearch-client-f79cf4f7b-pbz9d    1/1     Running   0          177m
elasticsearch-data-0                    1/1     Running   0          3h
elasticsearch-master-77d5d6c9db-gklgd   1/1     Running   0          3h3m
elasticsearch-master-77d5d6c9db-gvhcb   1/1     Running   0          3h3m
elasticsearch-master-77d5d6c9db-pflz6   1/1     Running   0          3h3m
kibana-6b9947fccb-4vp29                 1/1     Running   0          150m

4.2 Deploying an APM agent

The Java agent is used as the example here.

Next we configure an Elastic APM Java agent on the sample application spring-boot-simple.

First, the elastic-apm-agent-1.8.0.jar file needs to be built into the application container. Adding the following line to the Dockerfile used to build the image downloads the JAR directly:

RUN wget -O /apm-agent.jar https://search.maven.org/remotecontent?filepath=co/elastic/apm/elastic-apm-agent/1.8.0/elastic-apm-agent-1.8.0.jar

The complete Dockerfile is as follows:

FROM openjdk:8-jdk-alpine
ENV ELASTIC_APM_VERSION "1.8.0"
RUN wget -O /apm-agent.jar https://search.maven.org/remotecontent?filepath=co/elastic/apm/elastic-apm-agent/$ELASTIC_APM_VERSION/elastic-apm-agent-$ELASTIC_APM_VERSION.jar
COPY target/spring-boot-simple.jar /app.jar
CMD java -jar /app.jar

Next, add the following dependencies to the sample application so that we can integrate the OpenTracing libraries or instrument the code manually with the Elastic APM API.

<dependency>
    <groupId>co.elastic.apm</groupId>
    <artifactId>apm-agent-api</artifactId>
    <version>${elastic-apm.version}</version>
</dependency>
<dependency>
    <groupId>co.elastic.apm</groupId>
    <artifactId>apm-opentracing</artifactId>
    <version>${elastic-apm.version}</version>
</dependency>
<dependency>
    <groupId>io.opentracing.contrib</groupId>
    <artifactId>opentracing-spring-cloud-mongo-starter</artifactId>
    <version>${opentracing-spring-cloud.version}</version>
</dependency>

Then deploy the sample application to verify the setup.

(1) First deploy MongoDB. The YAML manifest is as follows:

---
apiVersion: v1
kind: Service
metadata:
  name: mongo
  namespace: elastic
  labels:
    app: mongo
spec:
  ports:
    - port: 27017
      protocol: TCP
  selector:
    app: mongo
---
apiVersion: apps/v1
kind: StatefulSet
metadata:
  namespace: elastic
  name: mongo
  labels:
    app: mongo
spec:
  serviceName: "mongo"
  selector:
    matchLabels:
      app: mongo
  template:
    metadata:
      labels:
        app: mongo
    spec:
      containers:
        - name: mongo
          image: mongo
          ports:
            - containerPort: 27017

(2) Deploy the Java application. The YAML manifest is as follows:

---
apiVersion: v1
kind: Service
metadata:
  namespace: elastic
  name: spring-boot-simple
  labels:
    app: spring-boot-simple
spec:
  type: NodePort
  ports:
    - port: 8080
      protocol: TCP
  selector:
    app: spring-boot-simple
---
apiVersion: apps/v1
kind: Deployment
metadata:
  namespace: elastic
  name: spring-boot-simple
  labels:
    app: spring-boot-simple
spec:
  selector:
    matchLabels:
      app: spring-boot-simple
  template:
    metadata:
      labels:
        app: spring-boot-simple
    spec:
      containers:
        - image: gjeanmart/spring-boot-simple:0.0.1-SNAPSHOT
          imagePullPolicy: Always
          name: spring-boot-simple
          command:
            - "java"
            - "-javaagent:/apm-agent.jar"
            - "-Delastic.apm.active=$(ELASTIC_APM_ACTIVE)"
            - "-Delastic.apm.server_urls=$(ELASTIC_APM_SERVER)"
            - "-Delastic.apm.service_name=spring-boot-simple"
            - "-jar"
            - "app.jar"
          env:
            - name: SPRING_DATA_MONGODB_HOST
              value: mongo
            - name: ELASTIC_APM_ACTIVE
              value: "true"
            - name: ELASTIC_APM_SERVER
              value: http://apm-server.elastic.svc.cluster.local:8200
          ports:
            - containerPort: 8080
---

After deploying, check that the pods reach the Running state.

kubectl get pod -n elastic

NAME READY STATUS RESTARTS AGE

apm-server-667bfc5cff-7vqsd 1/1 Running 0 34m

elasticsearch-client-f79cf4f7b-pbz9d 1/1 Running 0 3h30m

elasticsearch-data-0 1/1 Running 0 3h33m

elasticsearch-master-77d5d6c9db-gklgd 1/1 Running 0 3h36m

elasticsearch-master-77d5d6c9db-gvhcb 1/1 Running 0 3h36m

elasticsearch-master-77d5d6c9db-pflz6 1/1 Running 0 3h36m

kibana-6b9947fccb-4vp29 1/1 Running 0 3h3m

mongo-0 1/1 Running 0 11m

spring-boot-simple-fb5564885-rvh6q 1/1 Running 0 80s测试应用。

curl -X GET 172.17.100.50:30809

# Greetings from Spring Boot!

# 获取所有发布的 messages 数据:

curl -X GET 172.17.100.50:30809/message

# 使用 sleep=<ms> 来模拟慢请求:

curl -X GET 172.17.100.50:30809/message?sleep=3000

# 使用 error=true 来触发一异常:

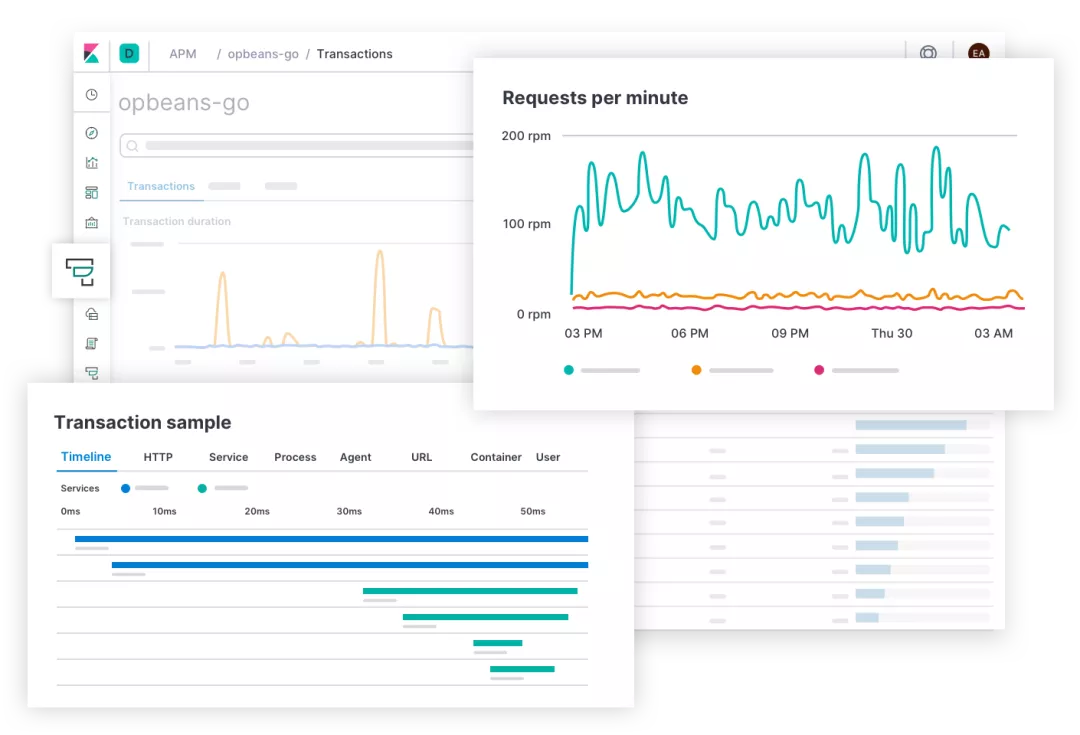

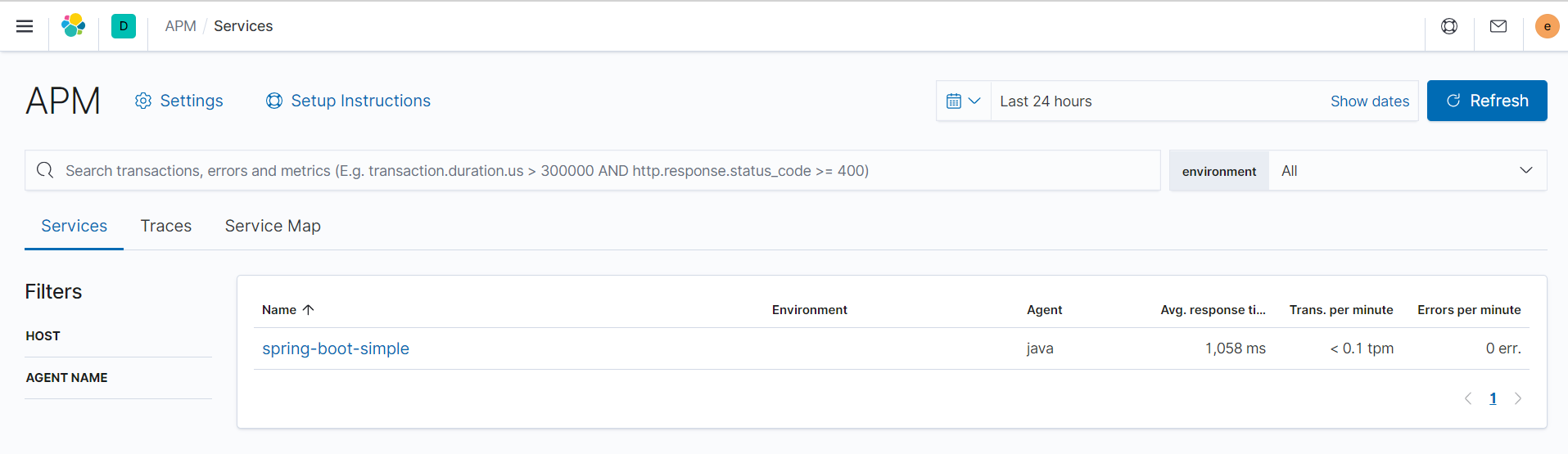

curl -X GET 172.17.100.50:30809/message?error=true然后我们可以在 kibane 的 APM 页面看到应用以及其数据了。

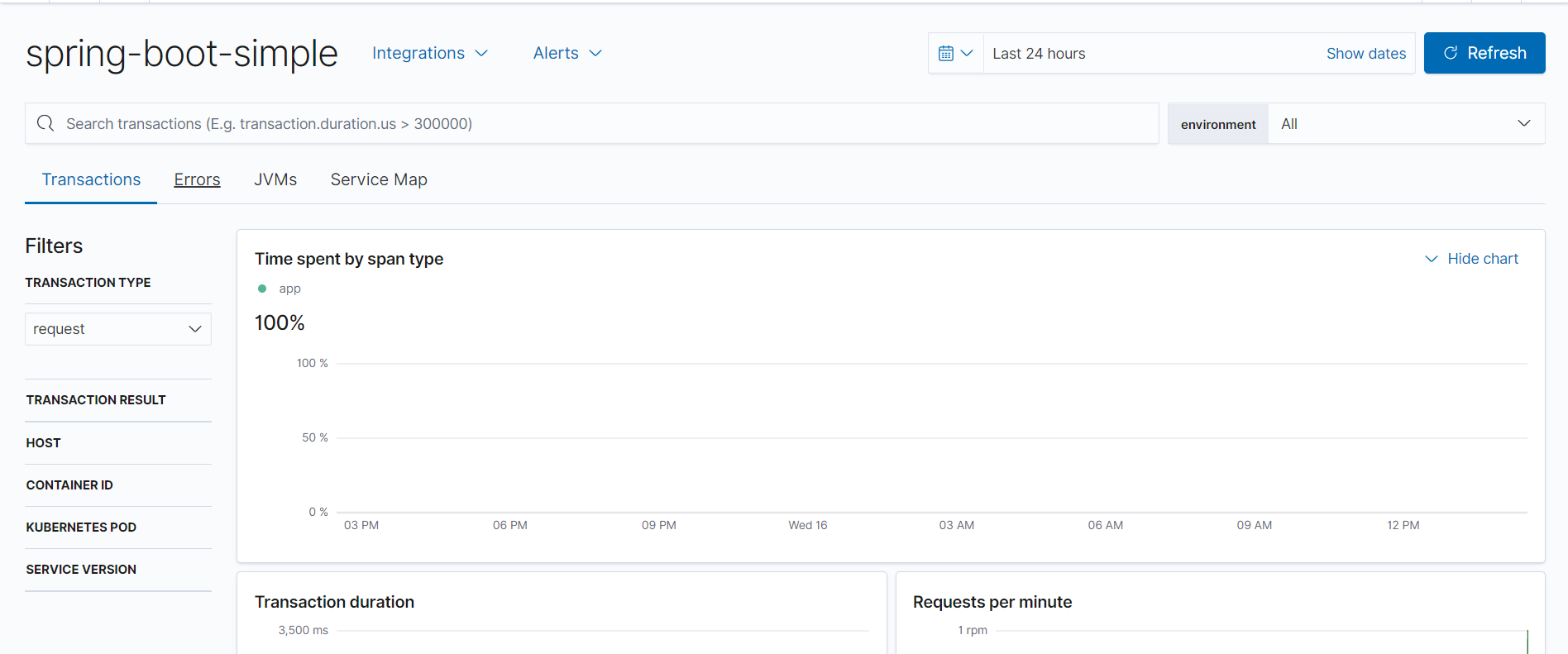

点击应用可以查看其性能追踪。

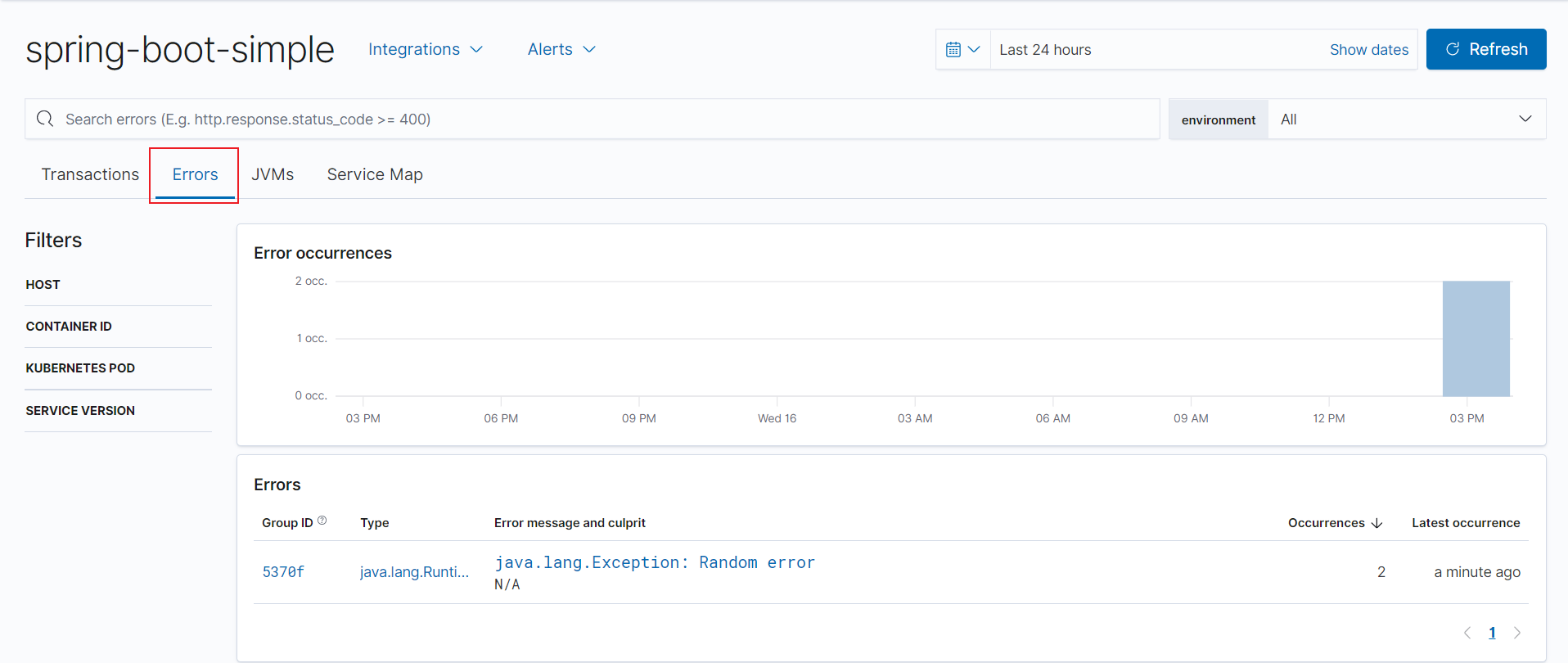

点击错误,可以查看错误数据。

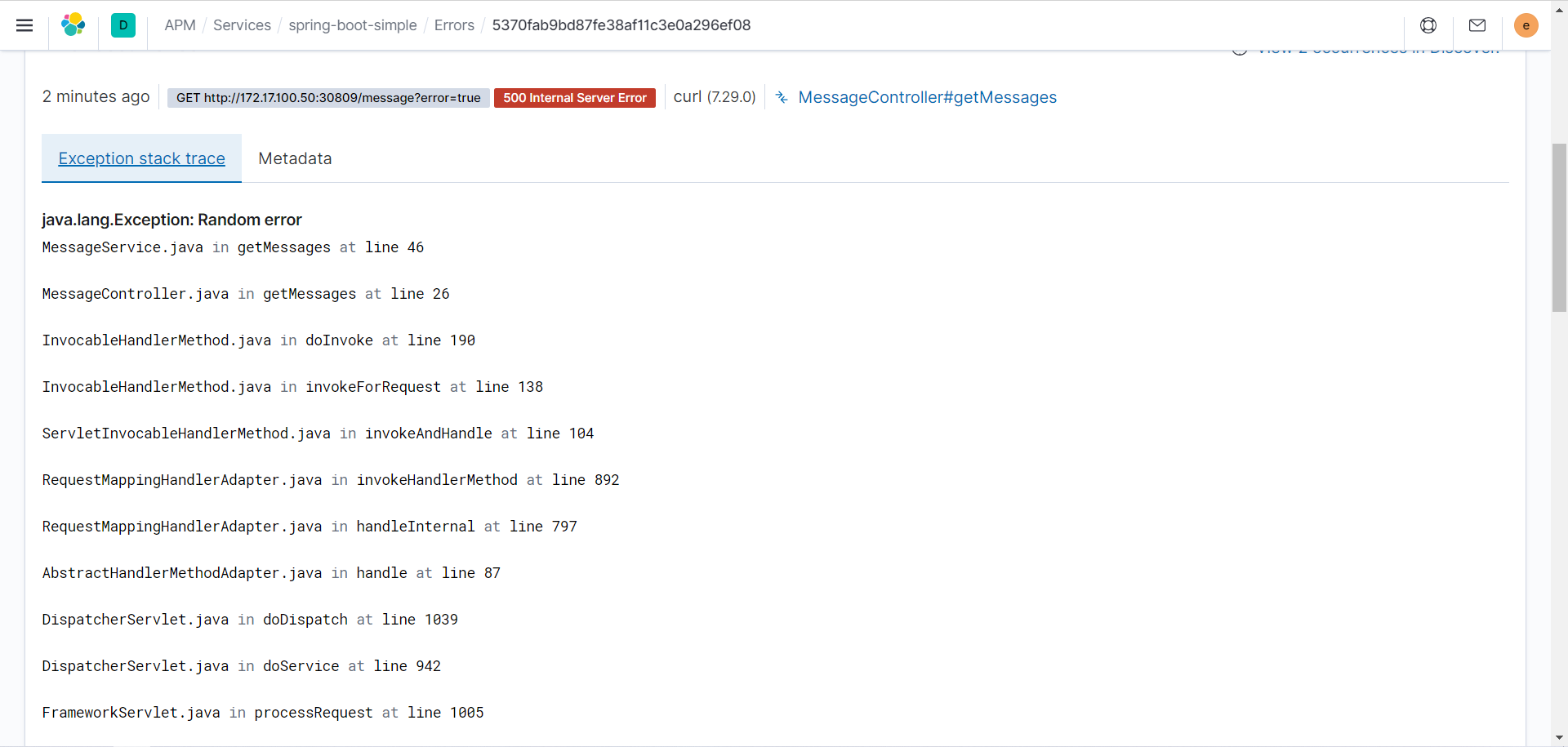

而且还可以查到详细的错误信息。

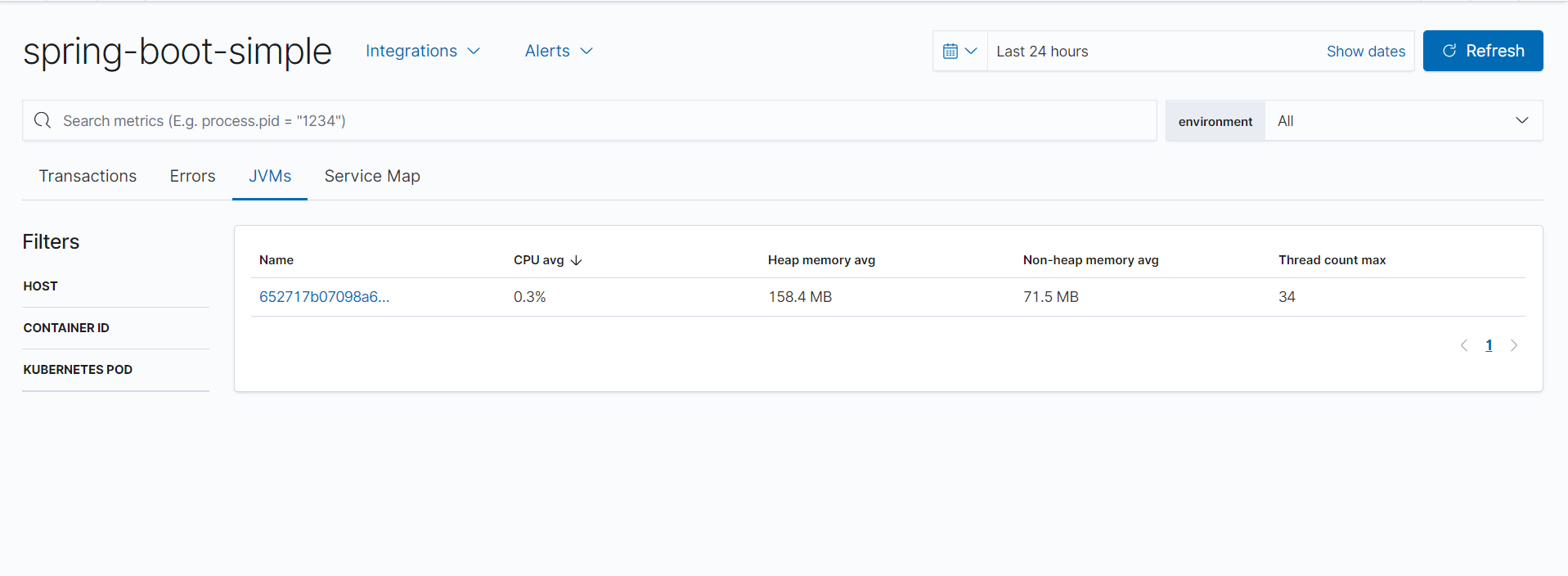

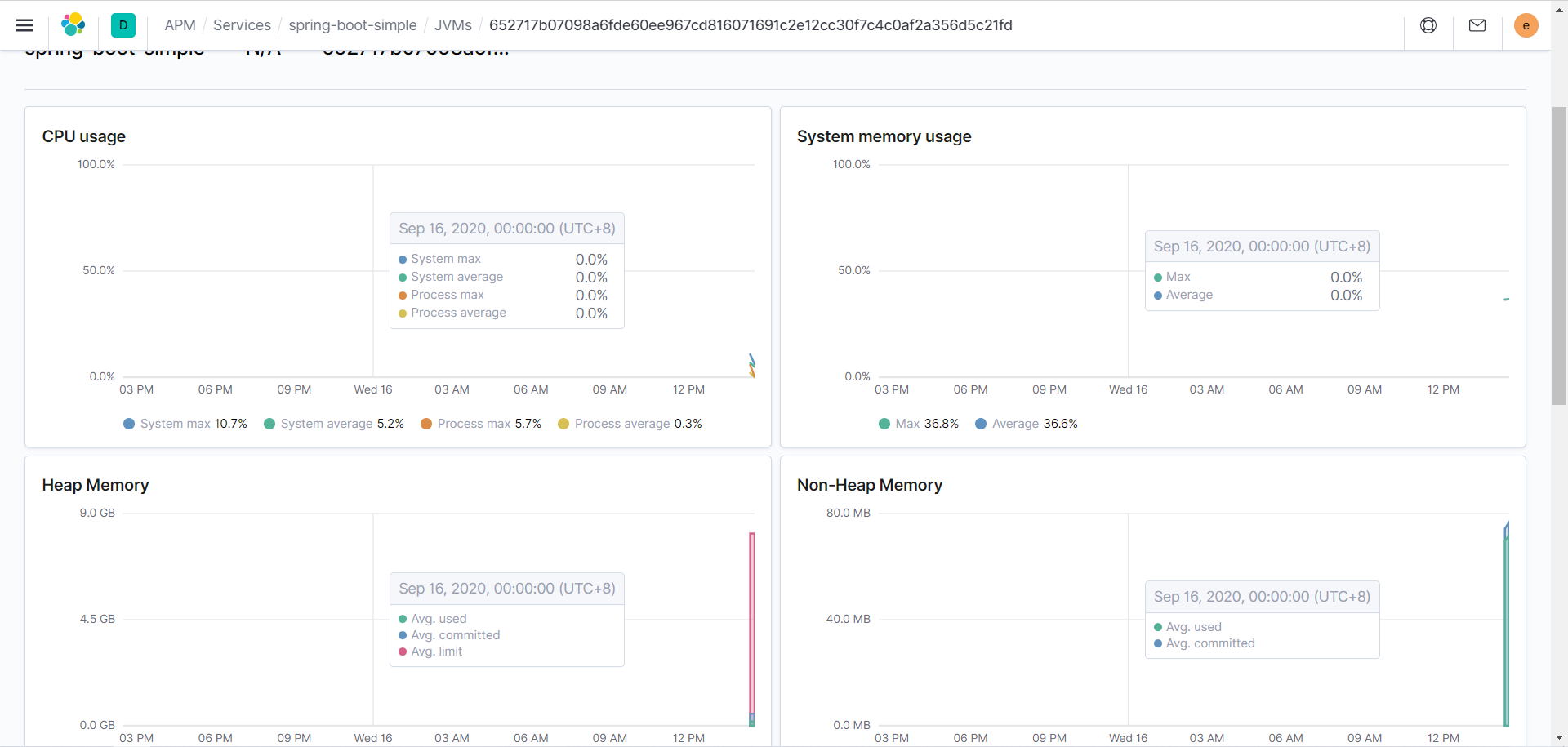

还可以查看 JVM 的数据监控。

可以查看一些详细的数据。

5. Collecting logs

Filebeat is used to collect the logs. The manifest is as follows:

---
apiVersion: v1
kind: ConfigMap
metadata:
  namespace: elastic
  name: filebeat-indice-lifecycle
  labels:
    app: filebeat
data:
  indice-lifecycle.json: |-
    {
      "policy": {
        "phases": {
          "hot": {
            "actions": {
              "rollover": {
                "max_size": "5GB",
                "max_age": "1d"
              }
            }
          },
          "delete": {
            "min_age": "3d",
            "actions": {
              "delete": {}
            }
          }
        }
      }
    }
---
apiVersion: v1
kind: ConfigMap
metadata:
  namespace: elastic
  name: filebeat-config
  labels:
    app: filebeat
data:
  filebeat.yml: |-
    filebeat.inputs:
    - type: container
      enabled: true
      paths:
      - /var/log/containers/*.log
      processors:
      - add_kubernetes_metadata:
          in_cluster: true
          host: ${NODE_NAME}
          matchers:
          - logs_path:
              logs_path: "/var/log/containers/"
    filebeat.autodiscover:
      providers:
        - type: kubernetes
          templates:
            - condition.equals:
                kubernetes.labels.app: mongo
              config:
                - module: mongodb
                  enabled: true
                  log:
                    input:
                      type: docker
                      containers.ids:
                        - ${data.kubernetes.container.id}
    processors:
      - drop_event:
          when.or:
              - and:
                  - regexp:
                      message: '^\d+\.\d+\.\d+\.\d+ '
                  - equals:
                      fileset.name: error
              - and:
                  - not:
                      regexp:
                          message: '^\d+\.\d+\.\d+\.\d+ '
                  - equals:
                      fileset.name: access
      - add_cloud_metadata:
      - add_kubernetes_metadata:
          matchers:
          - logs_path:
              logs_path: "/var/log/containers/"
      - add_docker_metadata:
    output.elasticsearch:
      hosts: ['${ELASTICSEARCH_HOST:elasticsearch}:${ELASTICSEARCH_PORT:9200}']
      username: ${ELASTICSEARCH_USERNAME}
      password: ${ELASTICSEARCH_PASSWORD}
    setup.kibana:
      host: '${KIBANA_HOST:kibana}:${KIBANA_PORT:5601}'
    setup.dashboards.enabled: true
    setup.template.enabled: true
    setup.ilm:
      policy_file: /etc/indice-lifecycle.json
---

apiVersion: apps/v1
kind: DaemonSet
metadata:
  namespace: elastic
  name: filebeat
  labels:
    app: filebeat
spec:
  selector:
    matchLabels:
      app: filebeat
  template:
    metadata:
      labels:
        app: filebeat
    spec:
      serviceAccountName: filebeat
      terminationGracePeriodSeconds: 30
      containers:
        - name: filebeat
          image: docker.elastic.co/beats/filebeat:7.8.0
          args: ["-c", "/etc/filebeat.yml", "-e"]
          env:
            - name: ELASTICSEARCH_HOST
              value: elasticsearch-client.elastic.svc.cluster.local
            - name: ELASTICSEARCH_PORT
              value: "9200"
            - name: ELASTICSEARCH_USERNAME
              value: elastic
            - name: ELASTICSEARCH_PASSWORD
              valueFrom:
                secretKeyRef:
                  name: elasticsearch-pw-elastic
                  key: password
            - name: KIBANA_HOST
              value: kibana.elastic.svc.cluster.local
            - name: KIBANA_PORT
              value: "5601"
            - name: NODE_NAME
              valueFrom:
                fieldRef:
                  fieldPath: spec.nodeName
          securityContext:
            runAsUser: 0
          resources:
            limits:
              memory: 200Mi
            requests:
              cpu: 100m
              memory: 100Mi
          volumeMounts:
            - name: config
              mountPath: /etc/filebeat.yml
              readOnly: true
              subPath: filebeat.yml
            - name: filebeat-indice-lifecycle
              mountPath: /etc/indice-lifecycle.json
              readOnly: true
              subPath: indice-lifecycle.json
            - name: data
              mountPath: /usr/share/filebeat/data
            - name: varlog
              mountPath: /var/log
              readOnly: true
            - name: varlibdockercontainers
              mountPath: /var/lib/docker/containers
              readOnly: true
            - name: dockersock
              mountPath: /var/run/docker.sock
      volumes:
        - name: config
          configMap:
            defaultMode: 0600
            name: filebeat-config
        - name: filebeat-indice-lifecycle
          configMap:
            defaultMode: 0600
            name: filebeat-indice-lifecycle
        - name: varlog
          hostPath:
            path: /var/log
        - name: varlibdockercontainers
          hostPath:
            path: /var/lib/docker/containers
        - name: dockersock
          hostPath:
            path: /var/run/docker.sock
        - name: data
          hostPath:
            path: /var/lib/filebeat-data
            type: DirectoryOrCreate
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRoleBinding
metadata:
  name: filebeat
subjects:
  - kind: ServiceAccount
    name: filebeat
    namespace: elastic
roleRef:
  kind: ClusterRole
  name: filebeat
  apiGroup: rbac.authorization.k8s.io
---
apiVersion: rbac.authorization.k8s.io/v1beta1
kind: ClusterRole
metadata:
  name: filebeat
  labels:
    app: filebeat
rules:
  - apiGroups: [""]
    resources:
      - namespaces
      - pods
    verbs:
      - get
      - watch
      - list
---
apiVersion: v1
kind: ServiceAccount
metadata:
  namespace: elastic
  name: filebeat
  labels:
    app: filebeat
---

The configuration above collects the container logs.
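The logs_path matcher in the Filebeat configuration ties each log file back to its pod. As an illustration of what the file names carry, here is a minimal sketch that splits one apart. It assumes the usual kubelet naming scheme for /var/log/containers/, namely `<pod>_<namespace>_<container>-<container-id>.log`. The function name `parse_container_log` and the shortened container ID are hypothetical.

```shell
# Split a container log path into pod, namespace, container name and container ID.
# Assumed layout: /var/log/containers/<pod>_<namespace>_<container>-<id>.log
parse_container_log() {
  file=${1##*/}            # drop the directory part
  file=${file%.log}        # drop the extension
  pod=${file%%_*}          # text before the first underscore
  rest=${file#*_}
  namespace=${rest%%_*}    # text before the next underscore
  rest=${rest#*_}
  container=${rest%-*}     # everything before the last dash
  id=${rest##*-}           # everything after the last dash
  printf 'pod=%s namespace=%s container=%s id=%s\n' "$pod" "$namespace" "$container" "$id"
}

# Example with a shortened, made-up container ID:
parse_container_log /var/log/containers/mongo-0_elastic_mongo-0123abcd.log
```

Splitting on underscores relies on pod and namespace names not containing that character, which holds for Kubernetes object names.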

References: