Author: 乔克

WeChat official account: 运维开发故事

Blog: https://www.jokerbai.com

Zhihu: 乔克叔叔

Hi everyone, I'm 乔克.

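In Kubernetes, the Pod is the smallest schedulable unit, and it is managed by various controllers, such as the ReplicaSet controller and the Deployment controller.

Kubernetes ships with many built-in controllers that cover most business needs, but companies inevitably end up needing custom controllers tailored to their own workloads.

There are plenty of articles online about custom controllers, and they are all much alike. As the saying goes, talk without practice is empty, so this article is mainly a write-up of my own hands-on work. If it helps you, a like and a share are welcome!

The article has two parts: the theory, then a concrete walkthrough.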

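How a Controller works

When we ask kube-apiserver to create a Deployment, the request is first stored in Etcd. Without a controller, that record would simply sit in Etcd and have no practical effect.

That is why the Deployment controller exists. It watches Deployment objects in kube-apiserver in real time, and whenever an object is added, deleted, or modified, it reacts accordingly, as shown here: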

// pkg/controller/deployment/deployment_controller.go, line 121

.....

dInformer.Informer().AddEventHandler(cache.ResourceEventHandlerFuncs{

AddFunc: dc.addDeployment,

UpdateFunc: dc.updateDeployment,

// This will enter the sync loop and no-op, because the deployment has been deleted from the store.

DeleteFunc: dc.deleteDeployment,

})

......

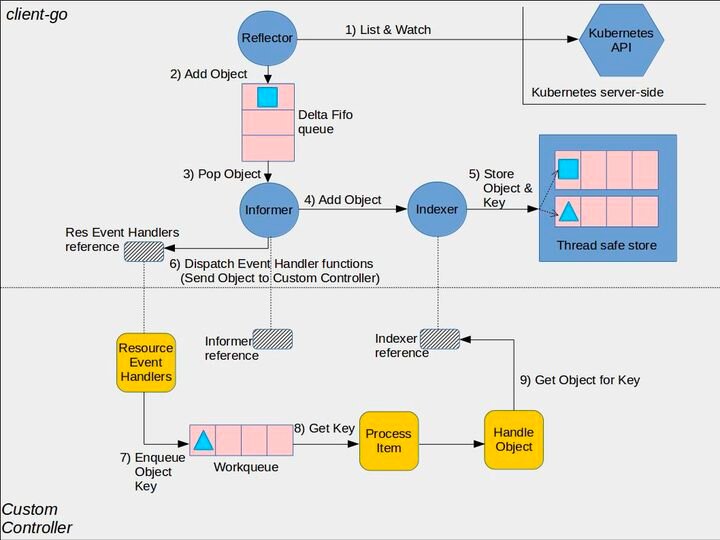

其实现的逻辑图如下(图片来自网络):

可以看到图的上半部分都由 client-go 实现了,下半部分才是我们具体需要去处理的。

client-go 主要包含 Reflector、Informer、Indexer 三个组件。

Reflector会List&Watchkube-apiserver 中的特定资源,然后会把变化的资源放入Delta FIFO队列中。Informer会从Delta FIFO队列中拿取对象交给相应的HandleDeltas。Indexer会将对象存储到缓存中。

上面部分不需要我们去开发,我们主要关注下半部分。

当把数据交给 Informer 的回调函数 HandleDeltas 后,Distribute 会将资源对象分发到具体的处理函数,这些处理函数通过一系列判断过后,把满足需求的对象放入 Workqueue 中,然后再进行后续的处理。
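To make the hand-off from handlers to workers concrete, here is a minimal sketch using only the Go standard library. It is not client-go code: `keyQueue`, `Add`, `Get`, and `Len` are hypothetical names standing in for the Workqueue. It only illustrates one property, namely that handlers enqueue keys rather than objects, and that repeated events for the same object collapse into a single pending item.

```go
package main

import (
	"fmt"
	"sync"
)

// keyQueue is a hypothetical, simplified stand-in for a client-go workqueue.
// It stores "namespace/name" keys and drops duplicates that are still pending.
type keyQueue struct {
	mu      sync.Mutex
	pending map[string]bool
	order   []string
}

func newKeyQueue() *keyQueue {
	return &keyQueue{pending: map[string]bool{}}
}

// Add enqueues key unless it is already waiting to be processed.
func (q *keyQueue) Add(key string) {
	q.mu.Lock()
	defer q.mu.Unlock()
	if q.pending[key] {
		return
	}
	q.pending[key] = true
	q.order = append(q.order, key)
}

// Get pops the oldest key; ok is false when the queue is empty.
func (q *keyQueue) Get() (key string, ok bool) {
	q.mu.Lock()
	defer q.mu.Unlock()
	if len(q.order) == 0 {
		return "", false
	}
	key = q.order[0]
	q.order = q.order[1:]
	delete(q.pending, key)
	return key, true
}

// Len reports how many keys are pending.
func (q *keyQueue) Len() int {
	q.mu.Lock()
	defer q.mu.Unlock()
	return len(q.order)
}

func main() {
	q := newKeyQueue()
	// Event handlers only enqueue keys: three events arrive for two objects.
	for _, key := range []string{"default/example-mysql", "default/example-mysql", "default/other"} {
		q.Add(key)
	}
	// A worker drains the queue; a real controller would reconcile each key here.
	for {
		key, ok := q.Get()
		if !ok {
			break
		}
		fmt.Println("sync", key)
	}
}
```

Because only the key is queued, the worker always reads the latest state of the object from the cache when it finally processes the item.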

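Introducing code-generator

The previous section said we only need to implement the business logic. Why is that? Mainly because Kubernetes provides code-generator【1】, a code generation tool that produces the client-side access code for us, such as the Informer and the ClientSet.

code-generator provides the following tools for generating code for Kubernetes resources:

- deepcopy-gen: generates deep-copy methods. For each type T it generates a func (t *T) DeepCopy() *T method; every API type needs to implement deep copy

- client-gen: generates a standard clientset for the resource

- informer-gen: generates informers, which provide an event mechanism for reacting to resource events

- lister-gen: generates listers, which provide a read-only cache layer for get and list requests (served from the indexer)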
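To show why API types need deep copies, here is a hand-written sketch of a method in the spirit of what deepcopy-gen produces. The `Spec` type and its fields are hypothetical and exist only for this illustration; the real generated code lives in zz_generated.deepcopy.go and may differ in detail.

```go
package main

import "fmt"

// Spec is a hypothetical type used only for this illustration.
type Spec struct {
	Name     string
	Replicas *int32
	Tags     []string
}

// DeepCopy returns a copy that shares no memory with the receiver.
// deepcopy-gen emits methods in this spirit; this one is written by hand.
func (in *Spec) DeepCopy() *Spec {
	if in == nil {
		return nil
	}
	out := new(Spec)
	*out = *in // copies value fields; the pointer and slice still alias the original
	if in.Replicas != nil {
		out.Replicas = new(int32)
		*out.Replicas = *in.Replicas
	}
	if in.Tags != nil {
		out.Tags = make([]string, len(in.Tags))
		copy(out.Tags, in.Tags)
	}
	return out
}

func main() {
	n := int32(1)
	orig := &Spec{Name: "db", Replicas: &n, Tags: []string{"a"}}
	cp := orig.DeepCopy()
	// Mutating the copy must not leak into the original.
	*cp.Replicas = 3
	cp.Tags[0] = "b"
	fmt.Println(*orig.Replicas, orig.Tags[0]) // prints "1 a"
}
```

This matters in a controller because objects read from the informer cache are shared; they should be copied before being modified.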

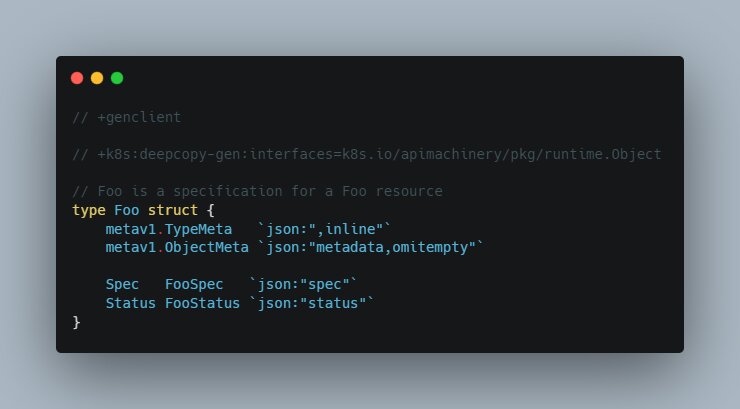

如果需要自动生成,就需要在代码中加入对应格式的配置,如下:

其中:

// +genclient表示需要创建 client// +k8s:deepcopy-gen:interfaces=k8s.io/apimachinery/pkg/runtime.Object表示在需要实现k8s.io/apimachinery/pkg/runtime.Object这个接口

除此还有更多的用法,可以参考 Kubernetes Deep Dive: Code Generation for CustomResources【2】进行学习。

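Introducing CRDs

CRD stands for CustomResourceDefinition. The controllers discussed above are mainly used to manage custom resources defined this way.

The following command shows which CRDs the current cluster uses: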

# kubectl get crd

NAME CREATED AT

ackalertrules.alert.alibabacloud.com 2021-06-15T02:19:59Z

alertmanagers.monitoring.coreos.com 2019-12-12T12:50:00Z

aliyunlogconfigs.log.alibabacloud.com 2019-12-02T10:15:02Z

apmservers.apm.k8s.elastic.co 2020-09-14T01:52:53Z

batchreleases.alicloud.com 2019-12-02T10:15:53Z

beats.beat.k8s.elastic.co 2020-09-14T01:52:53Z

chaosblades.chaosblade.io 2021-06-15T02:30:54Z

elasticsearches.elasticsearch.k8s.elastic.co 2020-09-14T01:52:53Z

enterprisesearches.enterprisesearch.k8s.elastic.co 2020-09-14T01:52:53Z

globaljobs.jobs.aliyun.com 2020-04-26T14:40:53Z

kibanas.kibana.k8s.elastic.co 2020-09-14T01:52:54Z

prometheuses.monitoring.coreos.com 2019-12-12T12:50:01Z

prometheusrules.monitoring.coreos.com 2019-12-12T12:50:02Z

servicemonitors.monitoring.coreos.com 2019-12-12T12:50:03Z

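However, creating a CRD object alone is not enough. It is static: once created, it is merely stored in Etcd. To make it meaningful, a controller has to work alongside it.

An example CRD looks like this: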

apiVersion: apiextensions.k8s.io/v1
kind: CustomResourceDefinition
metadata:
  # name must match the spec fields below, in the form <plural>.<group>
  name: students.coolops.io
spec:
  # group name used in the REST API: /apis/<group>/<version>
  group: coolops.io
  # list every API version of this custom resource
  versions:
    - name: v1 # version name, e.g. v1, v1beta1
      served: true # whether this version is served via the REST API at /apis/<group>/<version>/...
      storage: true # exactly one version must be marked as the storage version
      schema: # validation schema for the custom object
        openAPIV3Schema:
          type: object
          properties:
            spec:
              type: object
              properties:
                name:
                  type: string
                school:
                  type: string
  scope: Namespaced # scope of the resource: Namespaced or Cluster
  names:
    plural: students # plural name used in the REST API: /apis/<group>/<version>/<plural>
    shortNames: # short aliases
      - stu
    kind: Student # kind is the CamelCase form of the singular name, used in resource manifests
    singular: student # singular name, used as an alias on the CLI and for display
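The naming rules in the comments above can be sketched in a few lines of Go. The helpers `crdName` and `resourcePath` are hypothetical names used only here; they just spell out how the CRD name and the REST path are derived from the group, version, and plural name.

```go
package main

import "fmt"

// crdName builds the metadata.name a CRD must use: <plural>.<group>.
func crdName(plural, group string) string {
	return plural + "." + group
}

// resourcePath builds the REST path of a custom resource collection.
// An empty namespace yields the cluster-wide path.
func resourcePath(group, version, namespace, plural string) string {
	if namespace == "" {
		return fmt.Sprintf("/apis/%s/%s/%s", group, version, plural)
	}
	return fmt.Sprintf("/apis/%s/%s/namespaces/%s/%s", group, version, namespace, plural)
}

func main() {
	fmt.Println(crdName("students", "coolops.io"))
	fmt.Println(resourcePath("coolops.io", "v1", "default", "students"))
}
```

For the Student example, this gives the CRD name students.coolops.io and, in the default namespace, the path /apis/coolops.io/v1/namespaces/default/students.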

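Hands-on walkthrough

I originally planned to walk through the official demo【3】, but that felt perfunctory, and there are already plenty of such tutorials online. So I decided to implement a controller of my own that manages databases.

Since the goal is to demonstrate how to develop a controller, the features are deliberately simple:

- Create a database instance

- Delete a database instance

- Update a database instance

Development environment

The environment used for this experiment is:

| Software | Version |
|---|---|
| kubernetes | v1.22.3 |
| go | 1.17.3 |
| OS | CentOS 7.6 |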

Creating the CRD

The CRD is the foundation, and the controller mainly serves the CRD, so we define the CRD resource first to make development easier.

apiVersion: apiextensions.k8s.io/v1
kind: CustomResourceDefinition
metadata:
  name: databasemanagers.coolops.cn
spec:
  group: coolops.cn
  versions:
    - name: v1alpha1
      served: true
      storage: true
      schema:
        openAPIV3Schema:
          type: object
          properties:
            spec:
              type: object
              properties:
                deploymentName:
                  type: string
                replicas:
                  type: integer
                  minimum: 1
                  maximum: 10
                dbtype:
                  type: string
            status:
              type: object
              properties:
                availableReplicas:
                  type: integer
  names:
    kind: DatabaseManager
    plural: databasemanagers
    singular: databasemanager
    shortNames:
      - dm
  scope: Namespaced

Apply the CRD and check that it is created successfully.

# kubectl apply -f crd.yaml

customresourcedefinition.apiextensions.k8s.io/databasemanagers.coolops.cn created

# kubectl get crd | grep databasemanagers

databasemanagers.coolops.cn 2021-11-22T02:31:29Z

Define a test resource as follows:

apiVersion: coolops.cn/v1alpha1
kind: DatabaseManager
metadata:
  name: example-mysql
spec:
  dbtype: "mysql"
  deploymentName: "example-mysql"
  replicas: 1

Create it and check the result:

# kubectl apply -f example-mysql.yaml

databasemanager.coolops.cn/example-mysql created

# kubectl get dm

NAME AGE

example-mysql 9s

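So far, though, we have only created a piece of static data that does nothing in practice. Next we write a controller to manage this CRD.

Developing the Controller

Generating code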

1. Create the project directory database-manager-controller and initialize the Go module

# mkdir database-manager-controller

# cd database-manager-controller

# go mod init database-manager-controller

2. Create the source package directory pkg/apis/databasemanager

# mkdir pkg/apis/databasemanager -p

# cd pkg/apis/databasemanager

3. In the pkg/apis/databasemanager directory, create register.go with the following content

package databasemanager

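// GroupName is the group for database manager.
// Version is the API version; v1alpha1/register.go refers to it as dbcontroller.Version.

const (

GroupName = "coolops.cn"

Version = "v1alpha1"

)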

4. In the pkg/apis/databasemanager directory, create a v1alpha1 directory for version management

# mkdir v1alpha1

# cd v1alpha1

5. In the v1alpha1 directory, create doc.go with the following content

// +k8s:deepcopy-gen=package

// +groupName=coolops.cn

// Package v1alpha1 is the v1alpha1 version of the API

package v1alpha1

Here, // +k8s:deepcopy-gen=package and // +groupName=coolops.cn are both tags written for the code generators.

6. In the v1alpha1 directory, create type.go with the following content

package v1alpha1

import metav1 "k8s.io/apimachinery/pkg/apis/meta/v1"

// +genclient

// +k8s:deepcopy-gen:interfaces=k8s.io/apimachinery/pkg/runtime.Object

type DatabaseManager struct {

metav1.TypeMeta `json:",inline"`

metav1.ObjectMeta `json:"metadata,omitempty"`

Spec DatabaseManagerSpec `json:"spec"`

Status DatabaseManagerStatus `json:"status"`

}

// DatabaseManagerSpec is the desired state

type DatabaseManagerSpec struct {

DeploymentName string `json:"deploymentName"`

Replicas *int32 `json:"replicas"`

Dbtype string `json:"dbtype"`

}

// DatabaseManagerStatus is the observed state

type DatabaseManagerStatus struct {

AvailableReplicas int32 `json:"availableReplicas"`

}

// +k8s:deepcopy-gen:interfaces=k8s.io/apimachinery/pkg/runtime.Object

// DatabaseManagerList is a list of DatabaseManager resources

type DatabaseManagerList struct {

metav1.TypeMeta `json:",inline"`

metav1.ListMeta `json:"metadata"`

Items []DatabaseManager `json:"items"`

}

type.go mainly defines our resource types.

7. In the v1alpha1 directory, create register.go with the following content

package v1alpha1

import (

dbcontroller "database-manager-controller/pkg/apis/databasemanager"

metav1 "k8s.io/apimachinery/pkg/apis/meta/v1"

"k8s.io/apimachinery/pkg/runtime"

"k8s.io/apimachinery/pkg/runtime/schema"

)

// SchemeGroupVersion is group version used to register these objects

var SchemeGroupVersion = schema.GroupVersion{Group: dbcontroller.GroupName, Version: dbcontroller.Version}

// Kind takes an unqualified kind and returns back a Group qualified GroupKind

func Kind(kind string) schema.GroupKind {

return SchemeGroupVersion.WithKind(kind).GroupKind()

}

// Resource takes an unqualified resource and returns a Group qualified GroupResource

func Resource(resource string) schema.GroupResource {

return SchemeGroupVersion.WithResource(resource).GroupResource()

}

var (

// SchemeBuilder initializes a scheme builder

SchemeBuilder = runtime.NewSchemeBuilder(addKnownTypes)

// AddToScheme is a global function that registers this API group & version to a scheme

AddToScheme = SchemeBuilder.AddToScheme

)

// Adds the list of known types to Scheme.

func addKnownTypes(scheme *runtime.Scheme) error {

scheme.AddKnownTypes(SchemeGroupVersion,

&DatabaseManager{},

&DatabaseManagerList{},

)

metav1.AddToGroupVersion(scheme, SchemeGroupVersion)

return nil

}

The purpose of register.go is to make the client aware of the DatabaseManager API type through the addKnownTypes function.
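As a rough mental model of what registering known types achieves, here is a much-reduced sketch using only the standard library. `miniScheme` and `groupVersionKind` are hypothetical stand-ins, not the real runtime.Scheme API: the idea is simply that a group/version/kind triple is mapped to a Go type so that a client can create the right object when it decodes a response.

```go
package main

import (
	"fmt"
	"reflect"
)

// groupVersionKind identifies an API type, e.g. coolops.cn/v1alpha1 DatabaseManager.
type groupVersionKind struct {
	Group, Version, Kind string
}

// miniScheme is a hypothetical, much-reduced stand-in for runtime.Scheme.
type miniScheme struct {
	types map[groupVersionKind]reflect.Type
}

func newMiniScheme() *miniScheme {
	return &miniScheme{types: map[groupVersionKind]reflect.Type{}}
}

// AddKnownTypes registers each object under group/version,
// using its Go type name as the kind.
func (s *miniScheme) AddKnownTypes(group, version string, objs ...interface{}) {
	for _, obj := range objs {
		t := reflect.TypeOf(obj)
		if t.Kind() == reflect.Ptr {
			t = t.Elem()
		}
		s.types[groupVersionKind{Group: group, Version: version, Kind: t.Name()}] = t
	}
}

// New returns a pointer to a zero value of the type registered for gvk.
func (s *miniScheme) New(gvk groupVersionKind) (interface{}, error) {
	t, ok := s.types[gvk]
	if !ok {
		return nil, fmt.Errorf("no kind %q registered for %s/%s", gvk.Kind, gvk.Group, gvk.Version)
	}
	return reflect.New(t).Interface(), nil
}

// Simplified placeholder types for the illustration.
type DatabaseManager struct{ Name string }
type DatabaseManagerList struct{ Items []DatabaseManager }

func main() {
	s := newMiniScheme()
	s.AddKnownTypes("coolops.cn", "v1alpha1", &DatabaseManager{}, &DatabaseManagerList{})

	obj, err := s.New(groupVersionKind{Group: "coolops.cn", Version: "v1alpha1", Kind: "DatabaseManager"})
	fmt.Printf("%T %v\n", obj, err)

	_, err = s.New(groupVersionKind{Group: "coolops.cn", Version: "v1alpha1", Kind: "Unknown"})
	fmt.Println(err)
}
```

Without the registration step, the lookup for an unknown kind fails, which is roughly what happens when a client meets a type its scheme has never heard of.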

At this point the preparation for code generation is done. The current directory layout is:

# tree .

.

├── artifacts

│ └── database-manager

│ ├── crd.yaml

│ └── example-mysql.yaml

├── go.mod

├── go.sum

├── LICENSE

├── pkg

│ └── apis

│ └── databasemanager

│ ├── register.go

│ └── v1alpha1

│ ├── doc.go

│ ├── register.go

│ └── type.go

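Next, we use code-generator to generate the code.

8. Create the code generation scripts

The following code is mainly based on sample-controller【3】

(1) In the project root, create a hack directory; the code generation scripts live there

# mkdir hack && cd hack

(2) Create tools.go to add the code-generator dependency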

//go:build tools

// +build tools

// This package imports things required by build scripts, to force `go mod` to see them as dependencies

package tools

import _ "k8s.io/code-generator"

(3) Create update-codegen.sh, which generates the code

#!/usr/bin/env bash

set -o errexit

set -o nounset

set -o pipefail

SCRIPT_ROOT=$(dirname "${BASH_SOURCE[0]}")/..

CODEGEN_PKG=${CODEGEN_PKG:-$(cd "${SCRIPT_ROOT}"; ls -d -1 ./vendor/k8s.io/code-generator 2>/dev/null || echo ../code-generator)}

# generate the code with:

# --output-base because this script should also be able to run inside the vendor dir of

# k8s.io/kubernetes. The output-base is needed for the generators to output into the vendor dir

# instead of the $GOPATH directly. For normal projects this can be dropped.

bash "${CODEGEN_PKG}"/generate-groups.sh "deepcopy,client,informer,lister" \

database-manager-controller/pkg/client database-manager-controller/pkg/apis \

databasemanager:v1alpha1 \

--output-base "$(dirname "${BASH_SOURCE[0]}")/../.." \

--go-header-file "${SCRIPT_ROOT}"/hack/boilerplate.go.txt

# To use your own boilerplate text append:

# --go-header-file "${SCRIPT_ROOT}"/hack/custom-boilerplate.go.txt

Adjust the following part of the script to match your own project.

bash "${CODEGEN_PKG}"/generate-groups.sh "deepcopy,client,informer,lister" \

database-manager-controller/pkg/client database-manager-controller/pkg/apis \

databasemanager:v1alpha1 \

--output-base "$(dirname "${BASH_SOURCE[0]}")/../.." \

--go-header-file "${SCRIPT_ROOT}"/hack/boilerplate.go.txt

(4) Create verify-codegen.sh, which checks whether the generated code is up to date

#!/usr/bin/env bash

set -o errexit

set -o nounset

set -o pipefail

SCRIPT_ROOT=$(dirname "${BASH_SOURCE[0]}")/..

DIFFROOT="${SCRIPT_ROOT}/pkg"

TMP_DIFFROOT="${SCRIPT_ROOT}/_tmp/pkg"

_tmp="${SCRIPT_ROOT}/_tmp"

cleanup() {

rm -rf "${_tmp}"

}

trap "cleanup" EXIT SIGINT

cleanup

mkdir -p "${TMP_DIFFROOT}"

cp -a "${DIFFROOT}"/* "${TMP_DIFFROOT}"

"${SCRIPT_ROOT}/hack/update-codegen.sh"

echo "diffing ${DIFFROOT} against freshly generated codegen"

ret=0

diff -Naupr "${DIFFROOT}" "${TMP_DIFFROOT}" || ret=$?

cp -a "${TMP_DIFFROOT}"/* "${DIFFROOT}"

if [[ $ret -eq 0 ]]

then

echo "${DIFFROOT} up to date."

else

echo "${DIFFROOT} is out of date. Please run hack/update-codegen.sh"

exit 1

fi

(5) Create boilerplate.go.txt, which adds the open-source license header to the generated code

/*

Copyright The Kubernetes Authors.

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License.

*/

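(6) Set up the Go vendor directory

As the update-codegen.sh script shows, code generation relies on the dependencies under the vendor directory. Our project does not have one yet, so run the following command to create it.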

# go mod vendor

(7) Run the script from the project root to generate the code

# chmod +x hack/update-codegen.sh

# ./hack/update-codegen.sh

Generating deepcopy funcs

Generating clientset for databasemanager:v1alpha1 at database-manager-controller/pkg/client/clientset

Generating listers for databasemanager:v1alpha1 at database-manager-controller/pkg/client/listers

Generating informers for databasemanager:v1alpha1 at database-manager-controller/pkg/client/informers

The directory layout now looks like this:

# tree pkg/

pkg/

├── apis

│ └── databasemanager

│ ├── register.go

│ └── v1alpha1

│ ├── doc.go

│ ├── register.go

│ ├── type.go

│ └── zz_generated.deepcopy.go

└── client

├── clientset

│ └── versioned

│ ├── clientset.go

│ ├── doc.go

│ ├── fake

│ │ ├── clientset_generated.go

│ │ ├── doc.go

│ │ └── register.go

│ ├── scheme

│ │ ├── doc.go

│ │ └── register.go

│ └── typed

│ └── databasemanager

│ └── v1alpha1

│ ├── databasemanager_client.go

│ ├── databasemanager.go

│ ├── doc.go

│ ├── fake

│ │ ├── doc.go

│ │ ├── fake_databasemanager_client.go

│ │ └── fake_databasemanager.go

│ └── generated_expansion.go

├── informers

│ └── externalversions

│ ├── databasemanager

│ │ ├── interface.go

│ │ └── v1alpha1

│ │ ├── databasemanager.go

│ │ └── interface.go

│ ├── factory.go

│ ├── generic.go

│ └── internalinterfaces

│ └── factory_interfaces.go

└── listers

└── databasemanager

└── v1alpha1

├── databasemanager.go

└── expansion_generated.go

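Writing the Controller

The steps above generated the informer, lister, and clientset code. Now we can write the actual controller logic.

The features we need to implement are:

- Create a database instance

- Update a database instance

- Delete a database instance

(1) Create controller.go in the project root with the following content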

package main

import (

"context"

dbmanagerv1 "database-manager-controller/pkg/apis/databasemanager/v1alpha1"

clientset "database-manager-controller/pkg/client/clientset/versioned"

dbmanagerscheme "database-manager-controller/pkg/client/clientset/versioned/scheme"

informers "database-manager-controller/pkg/client/informers/externalversions/databasemanager/v1alpha1"

listers "database-manager-controller/pkg/client/listers/databasemanager/v1alpha1"

"fmt"

"github.com/golang/glog"

appsv1 "k8s.io/api/apps/v1"

corev1 "k8s.io/api/core/v1"

"k8s.io/apimachinery/pkg/api/errors"

metav1 "k8s.io/apimachinery/pkg/apis/meta/v1"

"k8s.io/apimachinery/pkg/util/runtime"

utilruntime "k8s.io/apimachinery/pkg/util/runtime"

"k8s.io/apimachinery/pkg/util/wait"

appsinformers "k8s.io/client-go/informers/apps/v1"

"k8s.io/client-go/kubernetes"

"k8s.io/client-go/kubernetes/scheme"

typedcorev1 "k8s.io/client-go/kubernetes/typed/core/v1"

appslisters "k8s.io/client-go/listers/apps/v1"

"k8s.io/client-go/tools/cache"

"k8s.io/client-go/tools/record"

"k8s.io/client-go/util/workqueue"

"k8s.io/klog/v2"

"time"

)

const controllerAgentName = "database-manager-controller"

const (

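// SuccessSynced is the event reason used when a resource is synced successfully

SuccessSynced = "Synced"

// MessageResourceSynced is the event message used when a resource is synced successfully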

MessageResourceSynced = "database manager synced successfully"

MessageResourceExists = "Resource %q already exists and is not managed by DatabaseManager"

ErrResourceExists = "ErrResourceExists"

)

type Controller struct {

// kubeclientset is the standard Kubernetes clientset

kubeclientset kubernetes.Interface

// dbmanagerclientset is the clientset for our own API group

dbmanagerclientset clientset.Interface

// deploymentsLister lists Deployment objects from the cache

deploymentsLister appslisters.DeploymentLister

// deploymentsSynced reports whether the Deployment cache has synced

deploymentsSynced cache.InformerSynced

// dbmanagerLister lists DatabaseManager objects from the cache

dbmanagerLister listers.DatabaseManagerLister

// dbmanagerSynced reports whether the DatabaseManager cache has synced

dbmanagerSynced cache.InformerSynced

// workqueue is a rate-limited work queue

workqueue workqueue.RateLimitingInterface

// recorder records events

recorder record.EventRecorder

}

// NewController creates and initializes the Controller

func NewController(kubeclientset kubernetes.Interface, dbmanagerclientset clientset.Interface,

dbmanagerinformer informers.DatabaseManagerInformer, deploymentInformer appsinformers.DeploymentInformer) *Controller {

utilruntime.Must(dbmanagerscheme.AddToScheme(scheme.Scheme))

glog.V(4).Info("Create event broadcaster")

// Create the event broadcaster

eventBroadcaster := record.NewBroadcaster()

// Write events to the log

eventBroadcaster.StartLogging(glog.Infof)

// Report events to the API server

eventBroadcaster.StartRecordingToSink(&typedcorev1.EventSinkImpl{Interface: kubeclientset.CoreV1().Events("")})

recorder := eventBroadcaster.NewRecorder(scheme.Scheme, corev1.EventSource{Component: controllerAgentName})

// Initialize the Controller

controller := &Controller{

kubeclientset: kubeclientset,

dbmanagerclientset: dbmanagerclientset,

deploymentsLister: deploymentInformer.Lister(),

deploymentsSynced: deploymentInformer.Informer().HasSynced,

dbmanagerLister: dbmanagerinformer.Lister(),

dbmanagerSynced: dbmanagerinformer.Informer().HasSynced,

workqueue: workqueue.NewNamedRateLimitingQueue(workqueue.DefaultControllerRateLimiter(), "DatabaseManagers"),

recorder: recorder,

}

glog.Info("Start up event handlers")

// Register event handlers for add, update, and delete events; each handler puts the object's key into the queue

dbmanagerinformer.Informer().AddEventHandler(cache.ResourceEventHandlerFuncs{

AddFunc: controller.enqueueDatabaseManager,

UpdateFunc: func(oldObj, newObj interface{}) {

oldDBManager := oldObj.(*dbmanagerv1.DatabaseManager)

newDBManager := newObj.(*dbmanagerv1.DatabaseManager)

if oldDBManager.ResourceVersion == newDBManager.ResourceVersion {

return

}

controller.enqueueDatabaseManager(newObj)

},

DeleteFunc: controller.enqueueDatabaseManagerForDelete,

})

// Register the Deployment event handlers

deploymentInformer.Informer().AddEventHandler(cache.ResourceEventHandlerFuncs{

AddFunc: controller.handleObject,

UpdateFunc: func(old, new interface{}) {

newDepl := new.(*appsv1.Deployment)

oldDepl := old.(*appsv1.Deployment)

if newDepl.ResourceVersion == oldDepl.ResourceVersion {

// Nothing changed, so return

return

}

controller.handleObject(new)

},

DeleteFunc: controller.handleObject,

})

return controller

}

// Run is the entry point that starts the controller

func (c *Controller) Run(threadiness int, stopCh <-chan struct{}) error {

defer utilruntime.HandleCrash()

defer c.workqueue.ShutDown()

glog.Info("start controller, cache sync")

// Wait for both informer caches to sync before starting the workers

if ok := cache.WaitForCacheSync(stopCh, c.deploymentsSynced, c.dbmanagerSynced); !ok {

return fmt.Errorf("failed to wait for caches to sync")

}

glog.Info("begin start worker thread")

// Start the worker goroutines

for i := 0; i < threadiness; i++ {

go wait.Until(c.runWorker, time.Second, stopCh)

}

glog.Info("worker thread started!!!!!!")

<-stopCh

glog.Info("worker thread stopped!!!!!!")

return nil

}

// runWorker loops, calling processNextWorkItem to take items off the workqueue, until the queue is shut down

func (c *Controller) runWorker() {

for c.processNextWorkItem() {

}

}

// processNextWorkItem takes one item off the workqueue and processes it

func (c *Controller) processNextWorkItem() bool {

obj, shutdown := c.workqueue.Get()

if shutdown {

return false

}

// We wrap this block in a func so we can defer c.workqueue.Done.

err := func(obj interface{}) error {

defer c.workqueue.Done(obj)

var key string

var ok bool

if key, ok = obj.(string); !ok {

c.workqueue.Forget(obj)

runtime.HandleError(fmt.Errorf("expected string in workqueue but got %#v", obj))

return nil

}

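// Run the business logic in syncHandler; on failure, requeue the key with rate limiting so it is retried

if err := c.syncHandler(key); err != nil {

c.workqueue.AddRateLimited(key)

return fmt.Errorf("error syncing '%s': %s, requeuing", key, err.Error())

}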

c.workqueue.Forget(obj)

glog.Infof("Successfully synced '%s'", key)

return nil

}(obj)

if err != nil {

runtime.HandleError(err)

return true

}

return true

}

// syncHandler contains the business logic for a single key

func (c *Controller) syncHandler(key string) error {

// Split the key into namespace and name

namespace, name, err := cache.SplitMetaNamespaceKey(key)

if err != nil {

runtime.HandleError(fmt.Errorf("invalid resource key: %s", key))

return nil

}

// Fetch the object from the cache

dbManager, err := c.dbmanagerLister.DatabaseManagers(namespace).Get(name)

if err != nil {

// We end up here when the DatabaseManager object has been deleted

if errors.IsNotFound(err) {

glog.Infof("DatabaseManager was deleted; run the actual deletion logic here: %s/%s ...", namespace, name)

return nil

}

runtime.HandleError(fmt.Errorf("failed to list DatabaseManager by: %s/%s", namespace, name))

return err

}

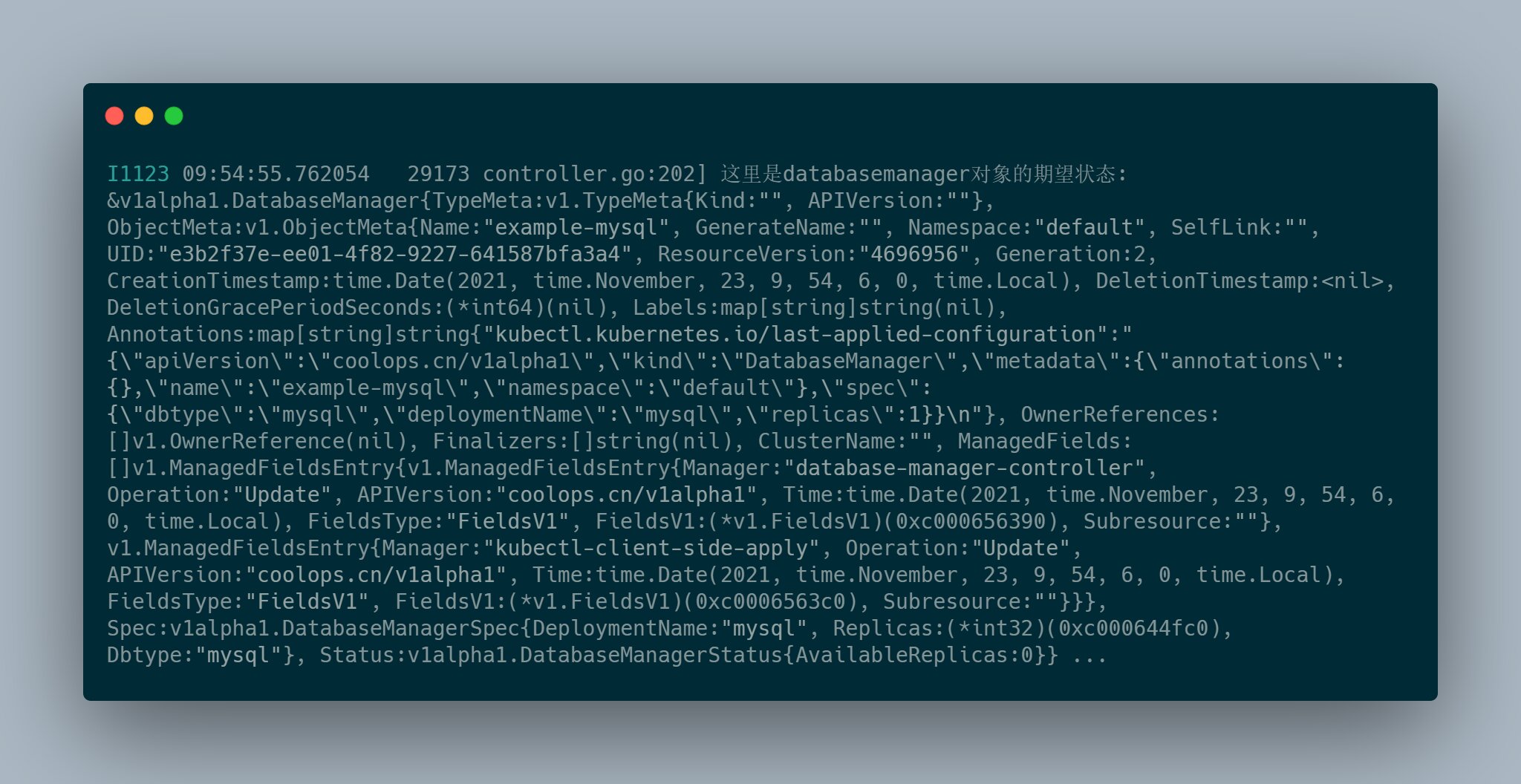

glog.Infof("desired state of the DatabaseManager object: %#v ...", dbManager)

// Make sure deploymentName is set

deploymentName := dbManager.Spec.DeploymentName

if deploymentName == "" {

utilruntime.HandleError(fmt.Errorf("%s: deploymentName must not be empty", key))

return nil

}

// Check whether the Deployment already exists in the cluster

deployment, err := c.deploymentsLister.Deployments(dbManager.Namespace).Get(deploymentName)

if errors.IsNotFound(err) {

// Not found, so create it

deployment, err = c.kubeclientset.AppsV1().Deployments(dbManager.Namespace).Create(

context.TODO(), newDeployment(dbManager), metav1.CreateOptions{})

}

// Return if either the Get or the Create failed

if err != nil {

return err

}

// If this Deployment is not controlled by the DatabaseManager, report a warning event

if !metav1.IsControlledBy(deployment, dbManager) {

msg := fmt.Sprintf(MessageResourceExists, deployment.Name)

c.recorder.Event(dbManager, corev1.EventTypeWarning, ErrResourceExists, msg)

return fmt.Errorf("%s", msg)

}

// If replicas differs from the desired value, update the Deployment

if dbManager.Spec.Replicas != nil && *dbManager.Spec.Replicas != *deployment.Spec.Replicas {

klog.V(4).Infof("DatabaseManager %s replicas: %d, deployment replicas: %d", name, *dbManager.Spec.Replicas, *deployment.Spec.Replicas)

deployment, err = c.kubeclientset.AppsV1().Deployments(dbManager.Namespace).Update(context.TODO(), newDeployment(dbManager), metav1.UpdateOptions{})

}

if err != nil {

return err

}

// Update the status

err = c.updateDatabaseManagerStatus(dbManager, deployment)

if err != nil {

return err

}

glog.Infof("the actual state comes from the business layer: fetch it here, compare it with the desired state, and act on the difference (create or delete)")

c.recorder.Event(dbManager, corev1.EventTypeNormal, SuccessSynced, MessageResourceSynced)

return nil

}

// updateDatabaseManagerStatus updates the status of the DatabaseManager

func (c *Controller) updateDatabaseManagerStatus(dbmanager *dbmanagerv1.DatabaseManager, deployment *appsv1.Deployment) error {

dbmanagerCopy := dbmanager.DeepCopy()

dbmanagerCopy.Status.AvailableReplicas = deployment.Status.AvailableReplicas

_, err := c.dbmanagerclientset.CoolopsV1alpha1().DatabaseManagers(dbmanager.Namespace).Update(context.TODO(), dbmanagerCopy, metav1.UpdateOptions{})

return err

}

func (c *Controller) handleObject(obj interface{}) {

var object metav1.Object

var ok bool

if object, ok = obj.(metav1.Object); !ok {

tombstone, ok := obj.(cache.DeletedFinalStateUnknown)

if !ok {

utilruntime.HandleError(fmt.Errorf("error decoding object, invalid type"))

return

}

object, ok = tombstone.Obj.(metav1.Object)

if !ok {

utilruntime.HandleError(fmt.Errorf("error decoding object tombstone, invalid type"))

return

}

klog.V(4).Infof("Recovered deleted object '%s' from tombstone", object.GetName())

}

klog.V(4).Infof("Processing object: %s", object.GetName())

if ownerRef := metav1.GetControllerOf(object); ownerRef != nil {

// Check whether the owner is a DatabaseManager; if not, ignore the object

if ownerRef.Kind != "DatabaseManager" {

return

}

dbmanage, err := c.dbmanagerLister.DatabaseManagers(object.GetNamespace()).Get(ownerRef.Name)

if err != nil {

klog.V(4).Infof("ignoring orphaned object '%s' of databaseManager '%s'", object.GetSelfLink(), ownerRef.Name)

return

}

c.enqueueDatabaseManager(dbmanage)

return

}

}

func newDeployment(dbmanager *dbmanagerv1.DatabaseManager) *appsv1.Deployment {

var image string

var name string

switch dbmanager.Spec.Dbtype {

case "mysql":

image = "mysql:5.7"

name = "mysql"

case "mariadb":

image = "mariadb:10.7.1"

name = "mariadb"

default:

image = "mysql:5.7"

name = "mysql"

}

labels := map[string]string{

"app": dbmanager.Spec.Dbtype,

}

return &appsv1.Deployment{

ObjectMeta: metav1.ObjectMeta{

Namespace: dbmanager.Namespace,

Name: dbmanager.Spec.DeploymentName, // must match the name syncHandler looks up

OwnerReferences: []metav1.OwnerReference{

*metav1.NewControllerRef(dbmanager, dbmanagerv1.SchemeGroupVersion.WithKind("DatabaseManager")),

},

},

Spec: appsv1.DeploymentSpec{

Replicas: dbmanager.Spec.Replicas,

Selector: &metav1.LabelSelector{MatchLabels: labels},

Template: corev1.PodTemplateSpec{

ObjectMeta: metav1.ObjectMeta{Labels: labels},

Spec: corev1.PodSpec{

Containers: []corev1.Container{

{

Name: name,

Image: image,

},

},

},

},

},

}

}

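// enqueueDatabaseManager computes the object's namespace/name key and adds it to the queue

func (c *Controller) enqueueDatabaseManager(obj interface{}) {

var key string

var err error

// Compute the cache key (namespace/name) for the object

if key, err = cache.MetaNamespaceKeyFunc(obj); err != nil {

runtime.HandleError(err)

return

}

// Put the key into the queue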

c.workqueue.AddRateLimited(key)

}

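// enqueueDatabaseManagerForDelete handles delete events

func (c *Controller) enqueueDatabaseManagerForDelete(obj interface{}) {

var key string

var err error

// Compute the key; this variant also handles tombstones (DeletedFinalStateUnknown)

key, err = cache.DeletionHandlingMetaNamespaceKeyFunc(obj)

if err != nil {

runtime.HandleError(err)

return

}

// Put the key into the queue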

c.workqueue.AddRateLimited(key)

}

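The main logic matches the controller workflow described at the start of the article. The key points are:

- In NewController, event handlers are registered for DatabaseManager and Deployment objects. Besides the cache being kept in sync, the corresponding key is put into the queue.

- The method that does the real work is syncHandler; write whatever your business needs require there.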
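The decision at the heart of syncHandler can be reduced to a small pure function. The sketch below is a simplification for illustration, not the controller code itself: the `action` type and the `decide` helper are hypothetical names, and the real handler also checks ownership and updates the status.

```go
package main

import "fmt"

// action names what the reconcile step should do next.
type action string

const (
	actionCreate action = "create"
	actionUpdate action = "update"
	actionNone   action = "none"
)

// decide compares the desired state with the observed state.
// exists reports whether the Deployment was found; a nil desired
// value means the replica count was left unspecified.
func decide(exists bool, desired *int32, actual int32) action {
	if !exists {
		return actionCreate
	}
	if desired != nil && *desired != actual {
		return actionUpdate
	}
	return actionNone
}

func main() {
	three := int32(3)
	fmt.Println(decide(false, &three, 0)) // no Deployment yet
	fmt.Println(decide(true, &three, 1))  // replicas drifted
	fmt.Println(decide(true, &three, 3))  // already converged
}
```

Keeping the comparison free of API calls like this makes the reconcile logic easy to unit test, while the handler itself stays responsible for talking to the cluster.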

2. Create main.go in the project root and write the entry function

(1) Write the handler for OS signals

This part uses the code from the demo【3】 as is

(2) Write the main entry function

package main

import (

"flag"

"time"

kubeinformers "k8s.io/client-go/informers"

"k8s.io/client-go/kubernetes"

"k8s.io/client-go/tools/clientcmd"

"k8s.io/klog/v2"

clientset "database-manager-controller/pkg/client/clientset/versioned"

informers "database-manager-controller/pkg/client/informers/externalversions"

"database-manager-controller/pkg/signals"

)

var (

masterURL string

kubeconfig string

)

func main() {

// klog.InitFlags(nil)

flag.Parse()

// Set up the channel that handles OS signals

stopCh := signals.SetupSignalHandler()

// Build the client config from the command-line arguments

cfg, err := clientcmd.BuildConfigFromFlags(masterURL, kubeconfig)

if err != nil {

klog.Fatalf("Error building kubeconfig: %s", err.Error())

}

// Initialize kubeClient

kubeClient, err := kubernetes.NewForConfig(cfg)

if err != nil {

klog.Fatalf("Error building kubernetes clientset: %s", err.Error())

}

// Initialize dbmanagerClient

dbmanagerClient, err := clientset.NewForConfig(cfg)

if err != nil {

klog.Fatalf("Error building example clientset: %s", err.Error())

}

kubeInformerFactory := kubeinformers.NewSharedInformerFactory(kubeClient, time.Second*30)

dbmanagerInformerFactory := informers.NewSharedInformerFactory(dbmanagerClient, time.Second*30)

// Initialize the controller

controller := NewController(kubeClient, dbmanagerClient,

dbmanagerInformerFactory.Coolops().V1alpha1().DatabaseManagers(), kubeInformerFactory.Apps().V1().Deployments())

// notice that there is no need to run Start methods in a separate goroutine. (i.e. go kubeInformerFactory.Start(stopCh)

// Start method is non-blocking and runs all registered informers in a dedicated goroutine.

kubeInformerFactory.Start(stopCh)

dbmanagerInformerFactory.Start(stopCh)

if err = controller.Run(2, stopCh); err != nil {

klog.Fatalf("Error running controller: %s", err.Error())

}

}

func init() {

flag.StringVar(&kubeconfig, "kubeconfig", "", "Path to a kubeconfig. Only required if out-of-cluster.")

flag.StringVar(&masterURL, "master", "", "The address of the Kubernetes API server. Overrides any value in kubeconfig. Only required if out-of-cluster.")

}

Testing the Controller

1. Add a Makefile to the project directory

build:
	echo "build database manager controller"
	CGO_ENABLED=0 GOOS=linux GOARCH=amd64 go build .

2. Run make build to compile

# make build

echo "build database manager controller"

build database manager controller

CGO_ENABLED=0 GOOS=linux GOARCH=amd64 go build .

This produces a binary named database-manager-controller.

3. Run the controller

# chmod +x database-manager-controller

# ./database-manager-controller -kubeconfig=$HOME/.kube/config -alsologtostderr=true

I1123 09:52:41.595726 29173 controller.go:81] Start up event handlers

I1123 09:52:41.597448 29173 controller.go:120] start controller, cache sync

I1123 09:52:41.699716 29173 controller.go:125] begin start worker thread

I1123 09:52:41.699737 29173 controller.go:130] worker thread started!!!!!!

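4. Create a test resource for the CRD, then watch the logs and check whether a Deployment is created

(1) The test sample is as follows

# cat example-mysql.yaml

apiVersion: coolops.cn/v1alpha1
kind: DatabaseManager
metadata:
  name: example-mysql
spec:
  dbtype: "mysql"
  deploymentName: "mysql"
  replicas: 1

(2) Run the following command to create it, and watch the logs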

# kubectl apply -f example-mysql.yaml

databasemanager.coolops.cn/example-mysql created

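You can see that the corresponding Deployment and Pod have been created. However, because the Deployment spec is incomplete, MySQL does not start properly.

We can nevertheless see that the controller received the event.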

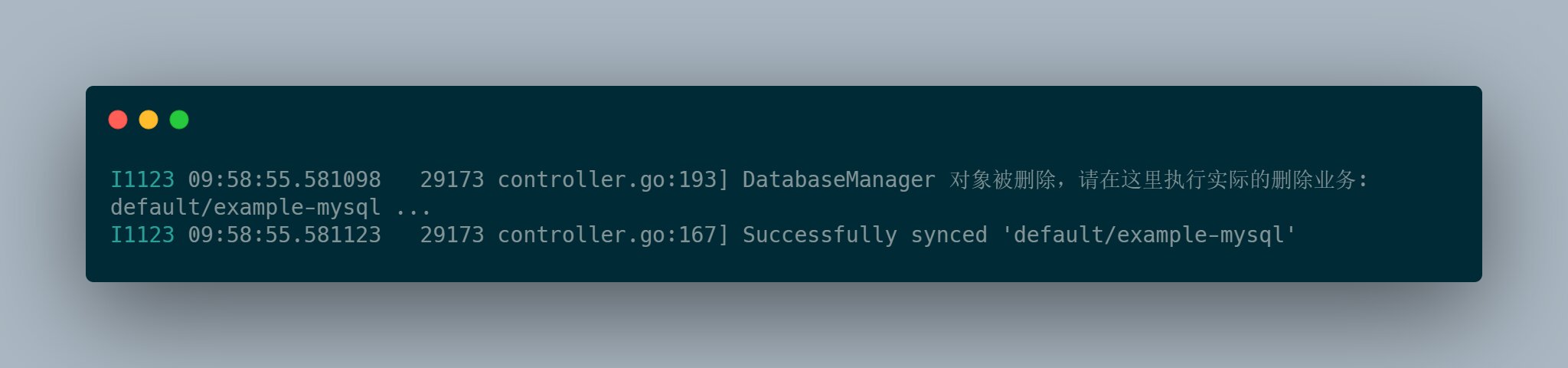

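If we delete the object, the logs show the corresponding response as well.

Summary

That is the whole process of developing a custom controller. It is relatively simple: the community has already done most of the work, so we only need to follow the template and implement our own logic.

The whole process is mainly based on sample-controller【3】. In short:

- Decide on the goal, then create the CRD and define the objects you need

- Write the code following the conventions, define the types the CRD needs, then use code-generator to generate the required informer, lister, and clientset

- Write the controller and implement the actual business logic

- Once it is written, verify that it behaves as expected and adjust further as needed

References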

【1】 https://github.com/kubernetes/code-generator.git

【2】 https://cloud.redhat.com/blog/kubernetes-deep-dive-code-generation-customresources

【3】 https://github.com/kubernetes/sample-controller.git

【4】 https://cloud.tencent.com/developer/article/1659440

【5】 https://www.bookstack.cn/read/source-code-reading-notes/kubernetes-k8s_events.md