# Blackbox Monitoring


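Blackbox monitoring tests a service's externally visible behavior from the user's point of view. Common blackbox checks include HTTP probes and TCP probes, which verify whether a site or service is reachable and how quickly it responds.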

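Compared with whitebox monitoring, blackbox monitoring is failure-oriented: it surfaces a failure quickly once it has happened. Whitebox monitoring, by contrast, focuses on proactively discovering or predicting latent problems.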

Blackbox Exporter is the official blackbox monitoring solution from the Prometheus community. It can probe endpoints over HTTP, HTTPS, DNS, TCP, and ICMP.

As with the other exporters, we first run a blackbox-exporter service in the Kubernetes cluster and supply its configuration through a ConfigMap, as shown below (blackbox.yaml):

```yaml
apiVersion: v1
kind: Service
metadata:
  name: blackbox
  namespace: monitoring
spec:
  selector:
    app: blackbox
  ports:
    - port: 9115
      targetPort: 9115
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: blackbox-config
  namespace: monitoring
data:
  blackbox.yaml: |-
    modules:
      http_2xx:
        prober: http
        timeout: 10s
        http:
          # Go reports HTTP/2 responses as "HTTP/2.0", so "HTTP/2" would never match
          valid_http_versions: ["HTTP/1.1", "HTTP/2.0"]
          valid_status_codes: [200]
          method: GET
          preferred_ip_protocol: "ip4"
      http_post_2xx:
        prober: http
        timeout: 10s
        http:
          valid_http_versions: ["HTTP/1.1", "HTTP/2.0"]
          valid_status_codes: [200]
          method: POST
          preferred_ip_protocol: "ip4"
      tcp_connect:
        prober: tcp
        timeout: 10s
      ping:
        prober: icmp
        timeout: 5s
        icmp:
          preferred_ip_protocol: "ip4"
      dns:
        prober: dns
        dns:
          transport_protocol: "tcp"
          preferred_ip_protocol: "ip4"
          query_name: "kubernetes.default.svc.cluster.local"
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: blackbox
  namespace: monitoring
spec:
  selector:
    matchLabels:
      app: blackbox
  template:
    metadata:
      labels:
        app: blackbox
    spec:
      containers:
        - name: blackbox
          image: prom/blackbox-exporter:v0.16.0
          args:
            - "--config.file=/etc/blackbox_exporter/blackbox.yaml"
            - "--log.level=error"
          ports:
            - containerPort: 9115
          volumeMounts:
            - name: config
              mountPath: /etc/blackbox_exporter
      volumes:
        - name: config
          configMap:
            name: blackbox-config
```


Then create the resources:

```shell
# kubectl apply -f .
service/blackbox created
configmap/blackbox-config created
deployment.apps/blackbox created
```
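Before wiring the exporter into Prometheus, it helps to see what a single probe request looks like: blackbox_exporter serves a `/probe` endpoint that takes the module name and the target as query parameters. The Python sketch below only builds that URL; `probe_url` is a hypothetical helper, and `blackbox.monitoring:9115` is assumed to be the in-cluster address of the Service defined above.

```python
from urllib.parse import urlencode


def probe_url(exporter: str, module: str, target: str) -> str:
    """Build the /probe URL that Prometheus (or curl) requests from blackbox_exporter."""
    # module selects an entry under `modules:` in blackbox.yaml; target is what gets probed
    query = urlencode({"module": module, "target": target})
    return f"http://{exporter}/probe?{query}"


if __name__ == "__main__":
    print(probe_url("blackbox.monitoring:9115", "dns", "kube-dns.kube-system:53"))
```

Fetching such a URL with curl from inside the cluster is a quick way to try a module by hand; appending `&debug=true` asks the exporter to include the probe's debug log in the response.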


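Next, add the Blackbox scrape jobs to Prometheus. Because our Prometheus is deployed with the Prometheus Operator, the jobs are added as additional scrape configuration (additionalScrapeConfigs), as follows:

prometheus-additional.yaml: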

```yaml
- job_name: "kubernetes-service-endpoints"
  kubernetes_sd_configs:
    - role: endpoints
  relabel_configs:
    - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scrape]
      action: keep
      regex: true
    - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scheme]
      action: replace
      target_label: __scheme__
      regex: (https?)
    - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_path]
      action: replace
      target_label: __metrics_path__
      regex: (.+)
    - source_labels:
        [__address__, __meta_kubernetes_service_annotation_prometheus_io_port]
      action: replace
      target_label: __address__
      regex: ([^:]+)(?::\d+)?;(\d+)
      replacement: $1:$2
    - action: labelmap
      regex: __meta_kubernetes_service_label_(.+)
    - source_labels: [__meta_kubernetes_namespace]
      action: replace
      target_label: kubernetes_namespace
    - source_labels: [__meta_kubernetes_service_name]
      action: replace
      target_label: kubernetes_name
- job_name: "kubernetes-service-dns"
  metrics_path: /probe
  params:
    module: [dns]
  static_configs:
    - targets:
        - kube-dns.kube-system:53
  relabel_configs:
    - source_labels: [__address__]
      target_label: __param_target
    - source_labels: [__param_target]
      target_label: instance
    - target_label: __address__
      replacement: blackbox.monitoring:9115
```
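The three relabel rules in the `kubernetes-service-dns` job are the usual pattern for multi-target exporters: the original address becomes the `target` URL parameter, it is copied into the `instance` label, and the scrape address is pointed at the exporter. The Python sketch below models only those three steps with plain dictionaries; it is not Prometheus's relabeling engine, it assumes (as Prometheus does) that the job's `params` appear as `__param_<name>` labels before relabeling, and both function names are hypothetical.

```python
from urllib.parse import urlencode


def apply_blackbox_relabel(labels: dict, exporter: str) -> dict:
    """Replay the three relabel_configs rules of the kubernetes-service-dns job on one target."""
    out = dict(labels)
    # 1. source_labels: [__address__] -> target_label: __param_target
    out["__param_target"] = out["__address__"]
    # 2. source_labels: [__param_target] -> target_label: instance
    out["instance"] = out["__param_target"]
    # 3. target_label: __address__ with a fixed replacement: scrape the exporter instead
    out["__address__"] = exporter
    return out


def scrape_url(labels: dict) -> str:
    """URL Prometheus would request: __address__ + __metrics_path__ + the __param_* labels."""
    params = {k[len("__param_"):]: v for k, v in labels.items() if k.startswith("__param_")}
    return f"http://{labels['__address__']}{labels['__metrics_path__']}?{urlencode(params)}"


# Labels of the static target before relabeling (metrics_path and params come from the job)
discovered = {
    "__address__": "kube-dns.kube-system:53",
    "__metrics_path__": "/probe",
    "__param_module": "dns",
}
relabeled = apply_blackbox_relabel(discovered, "blackbox.monitoring:9115")
print(scrape_url(relabeled))
```

The `instance` label keeps the probed address, so dashboards and alerts show `kube-dns.kube-system:53` rather than the exporter's address.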


Then recreate the secret:

```shell
# kubectl delete secret additional-config -n monitoring
# kubectl -n monitoring create secret generic additional-config --from-file=prometheus-additional.yaml
```

Then reload Prometheus:

```shell
# curl -X POST "http://10.68.215.41:9090/-/reload"
```

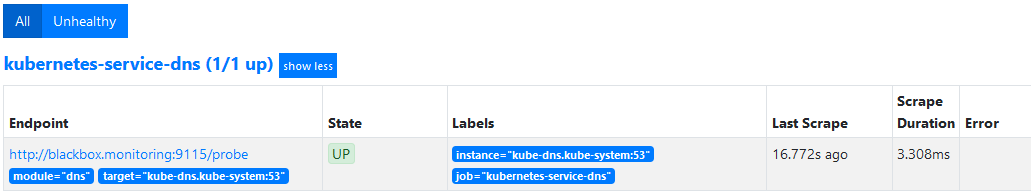

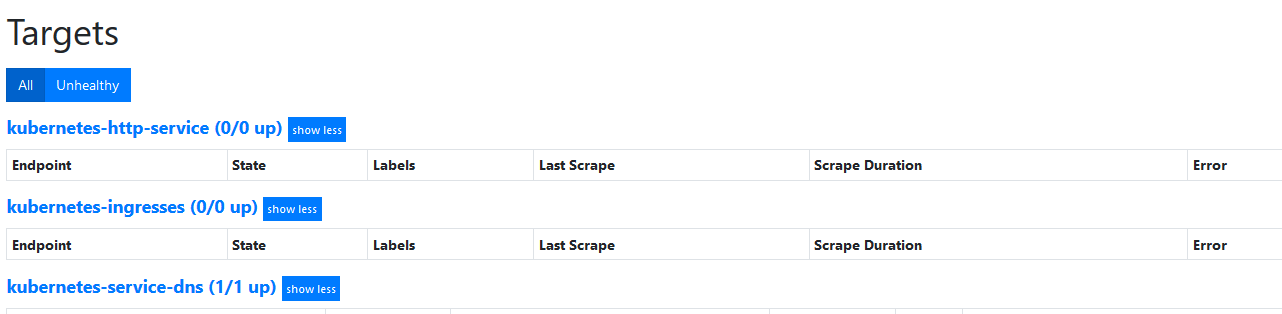

The new targets now appear on the Targets page.

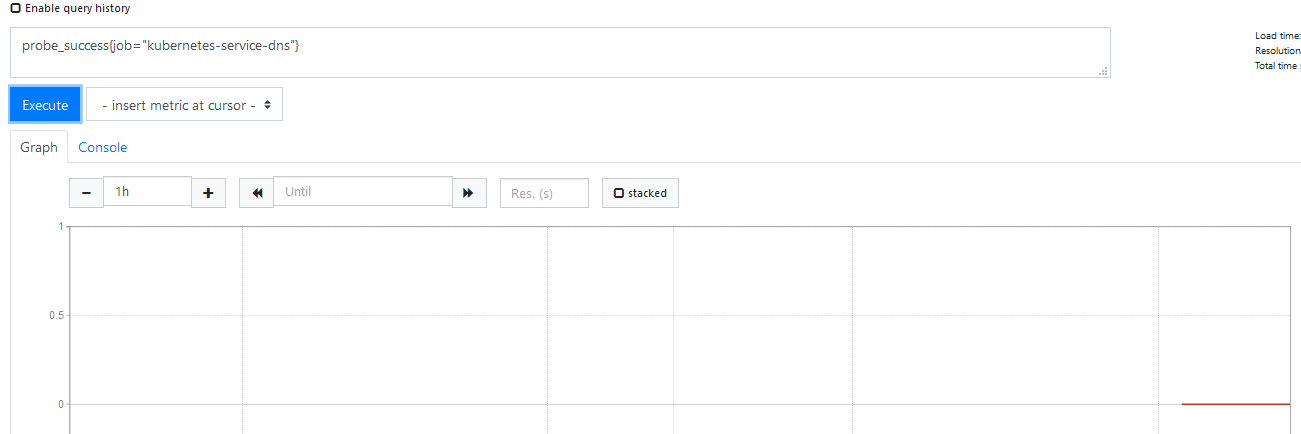

Then query `probe_success{job="kubernetes-service-dns"}` on the Graph page.
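`probe_success` is 1 when the probe passed and 0 when it failed. The `/probe` response is ordinary Prometheus text-format output, so it can also be inspected directly. The Python sketch below pulls unlabeled samples out of such a response; `parse_probe_metrics` is a hypothetical helper, and `SAMPLE` is an illustrative body written for this example, not output captured from this cluster.

```python
def parse_probe_metrics(text: str) -> dict:
    """Collect unlabeled samples (`name value [timestamp]`) from Prometheus text-format output."""
    values = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and # HELP / # TYPE comments
        if "{" in line:
            continue  # labeled series are ignored in this sketch
        name, value = line.split()[:2]  # an optional trailing timestamp is dropped
        values[name] = float(value)
    return values


# Illustrative response body only (not real output from this cluster)
SAMPLE = """\
# TYPE probe_dns_answer_rrs gauge
probe_dns_answer_rrs 1
# TYPE probe_success gauge
probe_success 1
# TYPE probe_duration_seconds gauge
probe_duration_seconds 0.0042
"""

metrics = parse_probe_metrics(SAMPLE)
print("probe ok" if metrics.get("probe_success") == 1 else "probe failed")
```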

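Besides DNS, the configuration above also defines an http_2xx module, i.e. an HTTP probe. The HTTP probe is one of the most commonly used probes in blackbox monitoring: it lets you monitor a website or HTTP service effectively, covering both its availability and user-experience metrics such as response time. Besides raising alerts promptly when the service misbehaves, it also helps administrators analyze and optimize the site experience. Here we use it to check HTTP services.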

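Because the http_2xx module is already configured in Blackbox, we only need to add scrape jobs to Prometheus. These can be combined with the Prometheus service discovery covered earlier: Service and Ingress discovery are a natural fit for blackbox monitoring. The configuration is as follows: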

```yaml
- job_name: "kubernetes-service-endpoints"
  kubernetes_sd_configs:
    - role: endpoints
  relabel_configs:
    - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scrape]
      action: keep
      regex: true
    - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scheme]
      action: replace
      target_label: __scheme__
      regex: (https?)
    - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_path]
      action: replace
      target_label: __metrics_path__
      regex: (.+)
    - source_labels:
        [__address__, __meta_kubernetes_service_annotation_prometheus_io_port]
      action: replace
      target_label: __address__
      regex: ([^:]+)(?::\d+)?;(\d+)
      replacement: $1:$2
    - action: labelmap
      regex: __meta_kubernetes_service_label_(.+)
    - source_labels: [__meta_kubernetes_namespace]
      action: replace
      target_label: kubernetes_namespace
    - source_labels: [__meta_kubernetes_service_name]
      action: replace
      target_label: kubernetes_name
- job_name: "kubernetes-service-dns"
  metrics_path: /probe
  params:
    module: [dns]
  static_configs:
    - targets:
        - kube-dns.kube-system:53
  relabel_configs:
    - source_labels: [__address__]
      target_label: __param_target
    - source_labels: [__param_target]
      target_label: instance
    - target_label: __address__
      replacement: blackbox.monitoring:9115
- job_name: "kubernetes-http-service"
  metrics_path: /probe
  params:
    module: [http_2xx]
  kubernetes_sd_configs:
    - role: service
  relabel_configs:
    - source_labels:
        [__meta_kubernetes_service_annotation_prometheus_io_http_probe]
      action: keep
      regex: true
    - source_labels: [__address__]
      target_label: __param_target
    - target_label: __address__
      replacement: blackbox.monitoring:9115
    - source_labels: [__param_target]
      target_label: instance
    - action: labelmap
      regex: __meta_kubernetes_service_label_(.+)
    - source_labels: [__meta_kubernetes_namespace]
      target_label: kubernetes_namespace
    - source_labels: [__meta_kubernetes_service_name]
      target_label: kubernetes_name
- job_name: "kubernetes-ingresses"
  metrics_path: /probe
  params:
    module: [http_2xx]
  kubernetes_sd_configs:
    - role: ingress
  relabel_configs:
    - source_labels:
        [__meta_kubernetes_ingress_annotation_prometheus_io_http_probe]
      action: keep
      regex: true
    - source_labels:
        [
          __meta_kubernetes_ingress_scheme,
          __address__,
          __meta_kubernetes_ingress_path,
        ]
      regex: (.+);(.+);(.+)
      replacement: ${1}://${2}${3}
      target_label: __param_target
    - target_label: __address__
      replacement: blackbox.monitoring:9115
    - source_labels: [__param_target]
      target_label: instance
    # role: ingress exposes __meta_kubernetes_ingress_* labels, not __meta_kubernetes_service_*
    - action: labelmap
      regex: __meta_kubernetes_ingress_label_(.+)
    - source_labels: [__meta_kubernetes_namespace]
      target_label: kubernetes_namespace
    - source_labels: [__meta_kubernetes_ingress_name]
      target_label: kubernetes_name
```
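The Ingress job builds its probe URL by joining three source labels with `;` and rewriting them through one regex. The Python sketch below reproduces just that step, assuming Prometheus's default `;` separator and its fully anchored regex matching (hence `re.fullmatch`); Python's `\1` stands in for Prometheus's `${1}`, and `ingress_probe_target` plus the host names are hypothetical.

```python
import re
from typing import Optional


def ingress_probe_target(scheme: str, address: str, path: str) -> Optional[str]:
    """Mimic: regex (.+);(.+);(.+) with replacement ${1}://${2}${3} into __param_target."""
    # Prometheus joins source_labels with the default separator ';'
    joined = ";".join([scheme, address, path])
    # Relabel regexes are anchored at both ends, so use fullmatch
    match = re.fullmatch(r"(.+);(.+);(.+)", joined)
    if match is None:
        return None  # no match: a replace action leaves __param_target untouched
    return match.expand(r"\1://\2\3")


print(ingress_probe_target("https", "grafana.example.com", "/login"))
```

Because every group is `(.+)`, an empty scheme, host, or path means no match, and the target keeps the value it had before this rule.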


Then recreate the secret:

```shell
# kubectl delete secret additional-config -n monitoring
# kubectl -n monitoring create secret generic additional-config --from-file=prometheus-additional.yaml
```

Then reload Prometheus:

```shell
# curl -X POST "http://10.68.215.41:9090/-/reload"
```

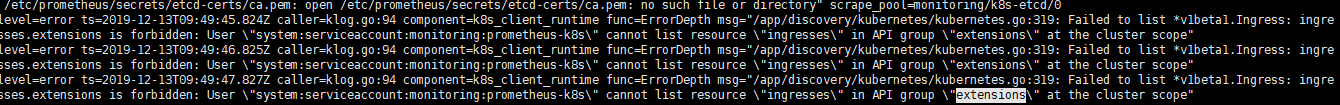

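However, the logs show errors.

They are caused by insufficient RBAC permissions: Prometheus needs get, list, and watch access to every resource type it discovers, including Ingresses. So we modify prometheus-clusterRole.yaml: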

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: prometheus-k8s
rules:
  - apiGroups:
      - ""
    resources:
      - nodes/metrics
      - configmaps
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - nodes
      - pods
      - services
      - endpoints
      - nodes/proxy
    verbs:
      - get
      - list
      - watch
  - apiGroups:
      - "extensions"
      # Ingress API group used by newer clusters and Prometheus releases
      - "networking.k8s.io"
    resources:
      - ingresses
    verbs:
      - get
      - list
      - watch
  - nonResourceURLs:
      - /metrics
    verbs:
      - get
```


Then apply it again:

```shell
# kubectl apply -f prometheus-clusterRole.yaml
```
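Discovery needs list and watch on each resource it watches, and the API group must match too. Kubernetes 1.22 stopped serving Ingress from `extensions/v1beta1`, and recent Prometheus releases discover Ingresses through `networking.k8s.io`, so on newer clusters a rule that names only `extensions` can still leave Ingress discovery failing. The Python model below is a deliberately simplified sketch of RBAC rule matching (it ignores wildcards, `resourceNames`, and aggregated roles), not the API server's authorizer; `allows` is a hypothetical helper.

```python
def allows(rules: list, api_group: str, resource: str, verb: str) -> bool:
    """Simplified RBAC check: does any rule grant `verb` on `resource` in `api_group`?"""
    return any(
        api_group in rule.get("apiGroups", [])
        and resource in rule.get("resources", [])
        and verb in rule.get("verbs", [])
        for rule in rules
    )


# An Ingress rule that names only the legacy "extensions" group
legacy_rules = [
    {"apiGroups": ["extensions"], "resources": ["ingresses"], "verbs": ["get", "list", "watch"]},
]
# The same rule with networking.k8s.io added
updated_rules = [
    {"apiGroups": ["extensions", "networking.k8s.io"], "resources": ["ingresses"], "verbs": ["get", "list", "watch"]},
]

for name, rules in (("legacy", legacy_rules), ("updated", updated_rules)):
    ok = all(allows(rules, "networking.k8s.io", "ingresses", v) for v in ("list", "watch"))
    print(name, "can watch networking.k8s.io ingresses:", ok)
```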

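Then we check the Targets page.

There is still no data, however, because the jobs above keep only targets whose metadata includes `__meta_kubernetes_service_annotation_prometheus_io_http_probe` (for Services) or `__meta_kubernetes_ingress_annotation_prometheus_io_http_probe` (for Ingresses). For these two jobs to discover anything, the Service or Ingress needs the corresponding annotation: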

```yaml
annotations:
  prometheus.io/http-probe: "true"
```
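The link between this annotation and the meta label in the `keep` rule is a name transformation: Kubernetes service discovery exposes each annotation as `__meta_kubernetes_<object>_annotation_<name>` (object being `service`, `ingress`, and so on), with every character that is not valid in a label name replaced by an underscore. A small Python sketch of that mapping, where `annotation_meta_label` is a hypothetical helper:

```python
import re


def annotation_meta_label(kind: str, annotation_key: str) -> str:
    """Meta label name that Kubernetes SD derives from an annotation key."""
    # Characters outside [a-zA-Z0-9_] are not allowed in label names and become '_'
    sanitized = re.sub(r"[^a-zA-Z0-9_]", "_", annotation_key)
    return f"__meta_kubernetes_{kind}_annotation_{sanitized}"


print(annotation_meta_label("service", "prometheus.io/http-probe"))
```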


For example:

```yaml
# extensions/v1beta1 Deployments are no longer served since Kubernetes 1.16; use apps/v1
apiVersion: apps/v1
kind: Deployment
metadata:
  name: redis
  namespace: kube-ops
spec:
  selector: # required by apps/v1
    matchLabels:
      app: redis
  template:
    metadata:
      annotations:
        prometheus.io/scrape: "true"
        prometheus.io/port: "9121"
      labels:
        app: redis
    spec:
      containers:
        - name: redis
          image: redis:4
          resources:
            requests:
              cpu: 100m
              memory: 100Mi
          ports:
            - containerPort: 6379
        - name: redis-exporter
          image: oliver006/redis_exporter:latest
          resources:
            requests:
              cpu: 100m
              memory: 100Mi
          ports:
            - containerPort: 9121
---
kind: Service
apiVersion: v1
metadata:
  name: redis
  namespace: kube-ops
  annotations:
    prometheus.io/scrape: "true"
    prometheus.io/port: "9121"
    prometheus.io/http-probe: "true"
spec:
  selector:
    app: redis
  ports:
    - name: redis
      port: 6379
      targetPort: 6379
    - name: prom
      port: 9121
      targetPort: 9121
```


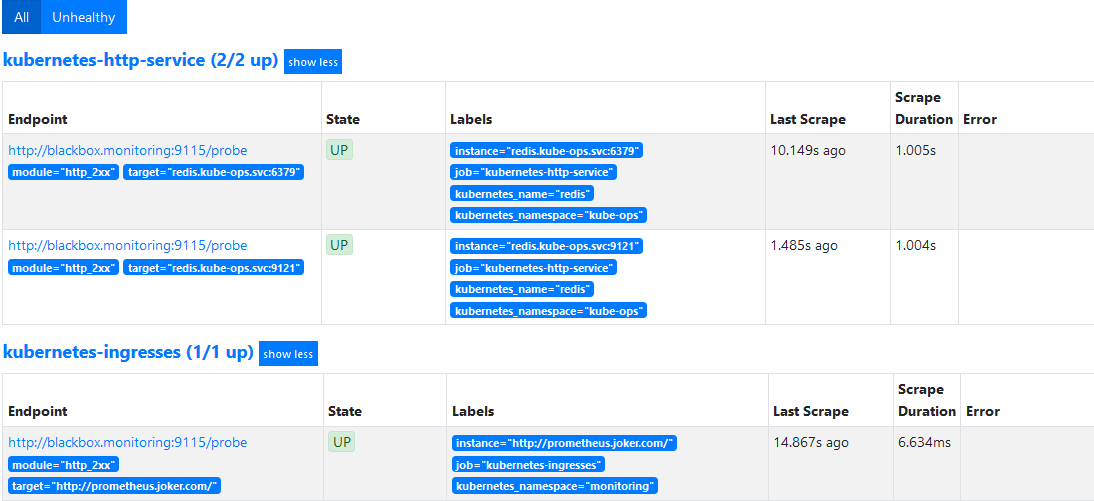


The web UI then shows:

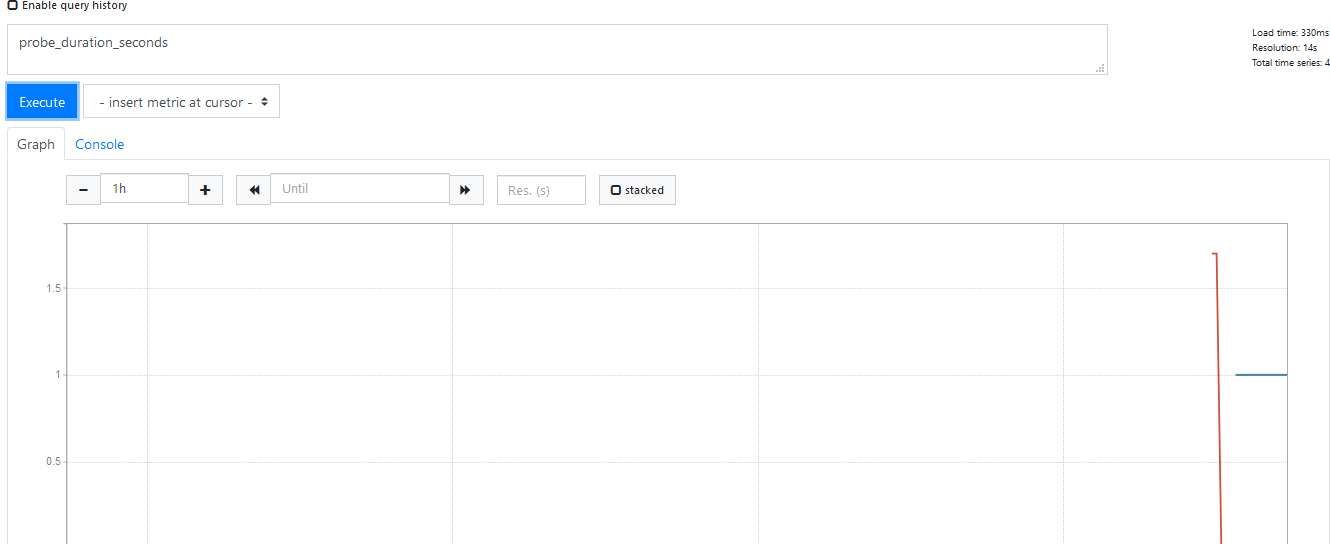

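The probe metrics are also visible on the Graph page.

If you need to control the probed path, port, and so on, add the corresponding rules to relabel_configs yourself. For example, to customize blackbox probing of Services, you could configure it like this: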

```yaml
- source_labels:
    [
      __meta_kubernetes_service_name,
      __meta_kubernetes_namespace,
      __meta_kubernetes_service_annotation_prometheus_io_http_probe_port,
      __meta_kubernetes_service_annotation_prometheus_io_http_probe_path,
    ]
  action: replace
  target_label: __param_target
  regex: (.+);(.+);(.+);(.+)
  replacement: $1.$2:$3$4
```


The Service then needs annotations like these:

```yaml
annotations:
  prometheus.io/http-probe: "true"
  prometheus.io/http-probe-port: "8080"
  prometheus.io/http-probe-path: "/healthz"
```
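With the four source labels joined by `;`, the rule turns a Service's name, namespace, and the two annotations into a `<service>.<namespace>:<port><path>` target. The Python sketch below reproduces only that regex and replacement (Python's `\1` standing in for Prometheus's `$1`, `re.fullmatch` for its anchored matching); `service_probe_target` is a hypothetical name. It also shows a practical consequence: if either annotation is missing, its label is empty, `(.+)` cannot match, and `__param_target` keeps whatever value it already had.

```python
import re
from typing import Optional


def service_probe_target(name: str, namespace: str, port: str, path: str) -> Optional[str]:
    """Mimic: regex (.+);(.+);(.+);(.+) with replacement $1.$2:$3$4."""
    joined = ";".join([name, namespace, port, path])  # default separator ';'
    match = re.fullmatch(r"(.+);(.+);(.+);(.+)", joined)
    if match is None:
        return None  # e.g. a missing annotation yields an empty field, so nothing is rewritten
    return match.expand(r"\1.\2:\3\4")


print(service_probe_target("redis", "kube-ops", "8080", "/healthz"))
```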


# Appendix

# Ping check

```yaml
- job_name: 'ping_all'
  scrape_interval: 1m
  metrics_path: /probe
  params:
    module: [ping]
  static_configs:
    - targets:
        - 192.168.1.2
      labels:
        instance: node2
    - targets:
        - 192.168.1.3
      labels:
        instance: node3
  relabel_configs:
    - source_labels: [__address__]
      target_label: __param_target
    - target_label: __address__
      replacement: 127.0.0.1:9115 # blackbox_exporter address
```


# HTTP check

```yaml
- job_name: 'http_get_all' # uses the blackbox_exporter http_2xx module
  scrape_interval: 30s
  metrics_path: /probe
  params:
    module: [http_2xx]
  static_configs:
    - targets:
        - https://www.coolops.cn
  relabel_configs:
    - source_labels: [__address__]
      target_label: __param_target
    - source_labels: [__param_target]
      target_label: instance
    - target_label: __address__
      replacement: 127.0.0.1:9115 # blackbox_exporter address
```


# Host liveness check

```yaml
- job_name: node_status
  metrics_path: /probe
  params:
    # The module must exist in the exporter's config; the ConfigMap above names its ICMP module "ping"
    module: [icmp]
  static_configs:
    - targets: ['10.165.94.31']
      labels:
        instance: node_status
        group: 'node'
  relabel_configs:
    - source_labels: [__address__]
      target_label: __param_target
    - target_label: __address__
      replacement: 127.0.0.1:9115 # blackbox_exporter address
```


# Port status check

```yaml
- job_name: 'prometheus_port_status'
  metrics_path: /probe
  params:
    module: [tcp_connect]
  static_configs:
    - targets: ['172.19.155.133:8765']
      labels:
        instance: 'port_status' # overwritten by the __param_target -> instance rule below
        group: 'tcp'
  relabel_configs:
    - source_labels: [__address__]
      target_label: __param_target
    - source_labels: [__param_target]
      target_label: instance
    - target_label: __address__
      replacement: 127.0.0.1:9115 # blackbox_exporter address
```
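The tcp_connect module checks whether a TCP connection to the target can be opened within the module's timeout. As a rough local analogue (not blackbox_exporter's implementation, whose TCP prober also supports TLS and query/response checks), here is a Python sketch with a hypothetical `tcp_connect_probe` helper that reports results under the same metric names:

```python
import socket
import time


def tcp_connect_probe(host: str, port: int, timeout: float = 10.0) -> dict:
    """Try to open a TCP connection within `timeout` seconds, like a bare tcp_connect probe."""
    start = time.monotonic()
    try:
        with socket.create_connection((host, port), timeout=timeout):
            success = 1
    except OSError:
        success = 0  # connection refused, host unreachable, or timed out
    return {"probe_success": success, "probe_duration_seconds": time.monotonic() - start}


if __name__ == "__main__":
    print(tcp_connect_probe("127.0.0.1", 9115, timeout=2))
```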


# Alerting

```yaml
groups:
  - name: example
    rules:
      - alert: curlHttpStatus
        expr: probe_http_status_code{job="blackbox-http"}>=400 and probe_success{job="blackbox-http"}==0
        #for: 1m
        labels:
          docker: number
        annotations:
          summary: "Business alert: website unreachable"
          description: "{{$labels.instance}} is unreachable, please check it promptly. Current status code: {{$value}}"
```
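Note what this expression requires: a status code of 400 or more *and* a failed probe. When the target cannot be reached at all, there is no HTTP response and blackbox_exporter typically reports `probe_http_status_code` as 0, so the rule stays silent during a complete outage. Alerting on `probe_success == 0` alone covers both cases. The Python sketch below models the two conditions for a single series (it ignores PromQL label matching and `for` durations); both function names and the three outcomes are hypothetical.

```python
def original_rule_fires(status_code: float, success: float) -> bool:
    """probe_http_status_code >= 400 and probe_success == 0"""
    return status_code >= 400 and success == 0


def success_only_rule_fires(success: float) -> bool:
    """probe_success == 0"""
    return success == 0


# (probe_http_status_code, probe_success) for three hypothetical probe outcomes
outcomes = {"healthy": (200, 1), "server error": (503, 0), "unreachable": (0, 0)}
for name, (code, ok) in outcomes.items():
    print(f"{name:12} original={original_rule_fires(code, ok)} success_only={success_only_rule_fires(ok)}")
```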


For the Prometheus configuration, see the example in the official repository: https://github.com/prometheus/prometheus/blob/master/documentation/examples/prometheus-kubernetes.yml

For the Blackbox configuration, see the official example: https://github.com/prometheus/blackbox_exporter/blob/master/example.yml